As the Internet of Things (IoT) grows rapidly, a large number of wireless sensor networks have emerged to monitor a wide range of infrastructure in domains such as healthcare, energy, transportation, smart cities, building automation, agriculture, and industry, continuously producing streams of data. Big Data technologies play a significant role within IoT processes as visual analytics tools, generating valuable knowledge in real time to support critical decision making. This paper provides a comprehensive survey of visualization methods, tools, and techniques for the IoT. We position data visualization inside the visual analytics process by reviewing the visual analytics pipeline. We study the various chart types available for data visualization and analyze the rules for employing each of them, taking into account the special conditions of the particular use case. We further examine some of the most promising visualization tools. Since each IoT domain is isolated in terms of Big Data approaches, we investigate visualization issues in each domain. Additionally, we review visualization methods oriented to anomaly detection. Finally, we provide an overview of the major challenges in IoT visualization.

The Internet of Things (IoT) has become one of the most prominent emerging technologies used to improve quality of life. The IoT connects a great number of heterogeneous devices in order to dynamically acquire various types of data from the real-world environment. IoT data is mined for useful information that context-aware applications can use to improve people’s daily lives. As data is typically accompanied by contextual information (time, location, status, etc.), the IoT becomes a valuable and voluminous source of contextual data characterized by variety (several sources), velocity (real-time collection), veracity (uncertainty of data), and value. The cooperation of Big Data and IoT has initiated the development of smart services for many complex infrastructures. As the IoT develops rapidly, Big Data technologies play a critical role as visual analytics tools, producing valuable knowledge in real time within IoT infrastructures to support critical decision making. Large-scale IoT applications employ a large number of sensors, resulting in a very large amount of collected data. In the context of IoT data analysis, two tasks are relevant: exploring the large amounts of data to find subsets and patterns of interest, and analyzing the available data to make assessments and predictions. This paper explores ways to gain insight from IoT data using meaningful visualizations. Visual analytics is an analysis technique that assists the exploration of vast amounts of data by utilizing data mining, statistics, and visualization. Interactive visualization tools combine automated analysis with human interaction, giving the user control during the data analysis process in order to produce valuable insight for decision making. They involve custom data visualization methods that the operator can interact with, in order to view data from different perspectives and focus on details of interest.
Data analytics methods involve machine learning and AI methods to automatically extract patterns from data and make predictions. Operators often do not trust AI methods, because their black-box operation gives no insight into the accuracy of their results. Visual analytics can be used to make AI methods more transparent and explainable by visualizing both their results and the way they work.

Visual Analytics

Visual analytics is a data analysis method that employs data mining, statistics, and visualization. Besides automated analysis, visual analytics tools incorporate human interaction, allowing user control and judgment during data analysis in order to produce valuable insight for decision making. Over the years, numerous research studies on visual analytics have been conducted. Most of them deal with the conventional visual analytics pipeline originally presented by Keim et al., which depicts the visual analytics process. As Figure 1 illustrates, the visual analytics process starts by performing data transformation subprocesses, such as filtering and sampling, that modify the data set into representations suitable for further exploration. To create knowledge, the pipeline adopts either a visual exploration method or an automatic analysis method, depending on the specific use case. In the case of automatic analysis, data mining methods are applied to assist the characterization of the data. The visual interface is operated by analysts and decision-makers to explore and analyze the data. The framework of the visual analytics pipeline has four core concepts: Data, Models, Visualization, and Knowledge. The Data module is responsible for the collection and pre-processing of the raw and heterogeneous data. As data acquisition is done in real time through sensors, raw data sets are usually incomplete, noisy, or inconsistent, which makes them unsuitable for direct use in the Visualization or Models modules. To overcome these difficulties, some data pre-processing has to be applied to the original data sets. Data pre-processing is a flexible process that depends on the quality of the raw data. This module includes pre-processing techniques such as data parsing, data integration, data cleaning (elimination of redundancy, errors, and invalid data), data transformation (normalization), and data reduction.
The Models module is responsible for converting data to information. This module includes conversion methods such as feature selection and generation, model building, selection, and validation.
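The pre-processing steps named for the Data module (cleaning, normalization, reduction) can be illustrated with a minimal Python sketch. It is not taken from any surveyed tool: the function names `clean`, `normalize`, and `downsample`, the `(timestamp, value)` tuple layout, and the choice of min-max scaling and bucket averaging are all assumptions made for illustration.

```python
from statistics import mean


def clean(readings):
    """Data cleaning: drop missing, non-numeric, and NaN values,
    and remove exact duplicate (timestamp, value) pairs."""
    seen = set()
    cleaned = []
    for ts, value in readings:
        if not isinstance(value, (int, float)):
            continue  # missing (None) or non-numeric reading
        if value != value:
            continue  # NaN is the only float that is not equal to itself
        if (ts, value) in seen:
            continue  # redundant duplicate
        seen.add((ts, value))
        cleaned.append((ts, float(value)))
    return cleaned


def normalize(readings):
    """Data transformation: min-max normalization of values to [0, 1]."""
    if not readings:
        return []
    values = [v for _, v in readings]
    lo, hi = min(values), max(values)
    span = hi - lo
    return [(ts, 0.0 if span == 0 else (v - lo) / span) for ts, v in readings]


def downsample(readings, bucket):
    """Data reduction: average each run of `bucket` consecutive readings,
    keeping the timestamp of the first reading in the run."""
    reduced = []
    for i in range(0, len(readings), bucket):
        chunk = readings[i:i + bucket]
        reduced.append((chunk[0][0], mean(v for _, v in chunk)))
    return reduced


# Hypothetical raw sensor stream with a gap, a duplicate, and a NaN.
raw = [(0, 10.0), (1, None), (1, 20.0), (1, 20.0),
       (2, float("nan")), (3, 30.0), (4, 40.0)]
prepared = downsample(normalize(clean(raw)), bucket=2)
```

Chaining the three functions mirrors the flexible nature of pre-processing described above: any step can be dropped or swapped depending on the quality of the raw data.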

Visual Analytics Pipeline

The Visualization module is responsible for visualizing and abstractly transforming the data. This module includes techniques for visual mapping (parallel coordinates, force-directed graphs, chord graphs, scatter matrices), view generation and coordination, and human-computer interaction. The Knowledge module is responsible for driving the process of transforming information into meaningful insight using human-machine interaction methods.

Visualization Charts, Rules, and Tools

Data visualization places data in an appropriate visual context that helps people understand its significance. This reduces the overall effort needed to analyze the data manually. As a result, the visualization and recognition of patterns within IoT-generated data play a significant role in gaining insight and enhance the decision-making process. Visualizing data plays a major role in data analytics, since it presents findings and their patterns alongside the original data. Data visualization helps to interpret the results by correlating the findings with the goals. It also exposes hidden patterns, trends, and correlations that would otherwise go undetected, in an impactful and perceptible manner. As a result, it supports good storytelling about the data and its patterns. In this section, we address different types of data charts. We also analyze chart selection rules that take into account the special conditions of a particular use case. Moreover, we present the most popular IoT visualization tools.

Different Tools For IoT Data Visualization

IoT Data Visualization Tools

Visualization tools assist the decision-making process, since they provide strong data analytics that help interpret big data acquired from the various IoT devices. IoT data visualization systems involve custom dashboard designs that, given a set of measurements acquired by several geographically scattered IoT sensors and several AI models applied to the data, allow the operator to explore the available raw measurements and gain insight into the models’ operation. The main aim of these systems is to enhance the operator’s trust in the models. A flexible visualization system should maintain some core characteristics, such as the ability to update in real time, interactivity, transparency, and explainability. Since IoT measurements are highly dynamic, with new measurements being collected in real time, dashboards should be able to update in real time as new measurements become available. The dashboard should provide an interactive user interface that allows operators to engage with the data and explore it. The dashboard should also provide means of looking into the applied AI models and visualizing their internals, to enhance the transparency and explainability of the models. Many proposed visualization platforms are designed based on a Service Oriented Architecture (SOA) with four key services: a Data Collection Service, which receives data; a Data Visualization Service, which presents the data intuitively; a Dynamic Dashboard Service, which provides an interface that organizes and displays various information such as text, the value of the machine, or the visualization result; and a Data Analytic Service, which delivers statistical analysis tools and consists of three main layers: Big Data Infrastructure as a Service, Big Data Platform as a Service, and Big Data Analytics Software as a Service. The most widely used IoT data visualization tools, across several industries globally, are summarized in this section.
Each one was compared against the following criteria: whether it is open source, the ability to integrate with popular data sources (MapR Hadoop Hive, Salesforce, Google Analytics, Cloudera Hadoop, etc.), interactive visualization, client type (desktop, online, or mobile app), and the availability of APIs for customization and embedding purposes.
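The real-time update requirement described above can be sketched with a bounded buffer that a dashboard widget redraws from. This is a minimal illustration under stated assumptions, not the design of any of the surveyed platforms: the class name `RollingSeries`, its methods, and the summary fields are hypothetical.

```python
from collections import deque


class RollingSeries:
    """Keeps only the most recent `max_points` measurements so that a
    dashboard widget can redraw quickly as new sensor data arrives."""

    def __init__(self, max_points):
        # A deque with maxlen silently discards the oldest entry when full.
        self._points = deque(maxlen=max_points)

    def append(self, timestamp, value):
        """Called whenever a new measurement is received."""
        self._points.append((timestamp, value))

    def points(self):
        """Return the current window, oldest first, ready for plotting."""
        return list(self._points)

    def snapshot(self):
        """Summary values a widget might show next to the chart."""
        values = [v for _, v in self._points]
        if not values:
            return {"count": 0, "latest": None, "min": None, "max": None}
        return {
            "count": len(values),
            "latest": values[-1],
            "min": min(values),
            "max": max(values),
        }


# Hypothetical temperature stream: five readings into a three-point window.
series = RollingSeries(max_points=3)
for t, temp in enumerate([21.0, 21.5, 22.0, 23.5, 23.0]):
    series.append(t, temp)
summary = series.snapshot()
```

Bounding the window keeps memory use and redraw cost constant regardless of how long the stream runs, at the price of dropping older history from the live view.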

Tableau is a fast and flexible data visualization tool that allows user interaction. Its user interface provides a wide range of fixed and custom visualizations employing a great variety of intuitive charts. In-depth analyses may be accomplished through R scripting. It supports most data formats and connections to various servers such as Amazon Aurora, Cloudera Hadoop, and Salesforce. Tableau’s online service is publicly available but supports limited storage. Server and desktop versions are available under commercial licenses.

ThingsBoard is an open-source IoT platform containing modules for device management, data collection, processing, and visualization. The platform allows the creation of custom IoT dashboards containing widgets that visualize sensor data collected from multiple devices. Its features include line and bar chart modules for both historical and real-time data visualization. It also contains map widgets that enable object tracking on online maps. Its multi-language technology stack (Java, Python, C++, JavaScript) is intended to provide reliable performance and real-time data analytics. It supports standard IoT protocols for device connectivity (e.g., MQTT, CoAP, and HTTP). It can be integrated with Node-RED, a flow-based programming platform for IoT, through a custom function.

Plotly is an online, cloud-based public data visualization service. It is built using Python and the Django framework. It provides various data storage services and modules for IoT visualization and analytics. It allows the creation of online dashboards employing a wide range of charts, such as statistical, scientific, 3D, and multiple-axes charts. It provides Python, R, MATLAB, and Julia based APIs for in-depth analyses. Also, conversion techniques for graphics libraries such as ggplot2, matplotlib, and MATLAB charts enhance the visualizations. Its internal tool Web Plot Digitizer (WPD) may automatically grab data from static images. It is publicly available with limited chart features and storage, while its full set of chart features is available through a professional membership license.

IBM Watson IoT Platform is a cloud platform as a service that supports several programming languages, services, and integrated DevOps in order to deploy and manage cloud applications. It features a set of built-in web applications and supports third-party software integration via REST APIs. Static and dynamic data can be visualized through custom diagrams, graphs, and tables that are easy to create. It provides access to device properties and alert management. Node-RED may be used for IoT device connection, APIs, and online services. Sensor data, stored in Cloudant NoSQL DB, may be processed for further data analysis.

Power BI is a cloud-based business analytics service. It provides a rich set of interactive visualizations and detailed analysis reports for large enterprises. It is designed to trace and visualize various sensor-gathered data. The platform works in cooperation with Azure cloud-based analytics and cognitive services. It consists of three basic components: Power BI Desktop (report generator), Service (SaaS, report publisher), and Apps (report viewer and dashboard). Numerous types of source integrations are supported, and rich data visualizations are provided. Among other methods, data may be queried using the natural language query feature. Data analysis is performed on both real-time streaming data and static historical data. Power BI provides sub-components that enable IoT integration.

Immersive Virtual Reality is currently recognized as one of the most promising technologies for enabling virtual interactions with physical systems. The user is situated within a 3D environment where data visualizations and physical space are matched, so that users can orient themselves, navigate, and interact naturally. These frameworks utilize hybrid collaborative multi-modal methods to enable collaboration between users and to provide intuitive and natural interaction within a specific virtual environment. As users remain immersed within a 3D virtual environment, immersive reality applications require sophisticated approaches for interacting with IoT data analytics visualizations. As such, immersive analytics is the visualization outcome within IoT infrastructures. Immersive analytics frameworks promote a better understanding of IoT services and enhance decision making. Such a collaborative virtual environment presupposes highly responsive connectivity, which may be achieved by employing high-speed 5G network infrastructures that provide ultra-low-latency and ultra-reliable communications.

Similarly, a Cyber-Physical System (CPS) is a set of physical devices, connected through a communication network, that communicates with its virtual cyberspace. Each physical object is associated with a cyber model that stores all information and knowledge about it. This cyber model is called a “Digital Twin”. It allows data transfer from the physical to the cyber part. However, in a CPS where every physical object has a digital twin counterpart, the spatiotemporal relations between the individual digital twins are far more valuable than any single digital twin. Digital twins may be generated using 3D technologies through AR/VR/MR or even hologram devices.
Digital twins integrate various technologies such as haptics, humanoid robotics and soft robotics, 5G and the Tactile Internet, cloud computing offloading, wearable technology, IoT services, and AI.
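The idea of a cyber model that mirrors a physical object, together with the spatiotemporal relations between twins, can be made concrete with a small Python sketch. Everything in it is a hypothetical illustration rather than an established digital twin API: the `DigitalTwin` class, its fields, the rule that stale updates are ignored, and the use of Euclidean distance and update-time difference as the spatial and temporal relations are all assumptions.

```python
from dataclasses import dataclass, field
from math import dist


@dataclass
class DigitalTwin:
    """Cyber model that stores the known information about one physical object."""
    object_id: str
    position: tuple                     # (x, y, z), assumed shared coordinate frame
    state: dict = field(default_factory=dict)
    last_update: float = 0.0            # timestamp of the most recent sync

    def sync(self, timestamp, readings, position=None):
        """Physical-to-cyber transfer: merge new sensor readings.
        Updates older than the current state are ignored (an assumption)."""
        if timestamp < self.last_update:
            return False
        self.state.update(readings)
        if position is not None:
            self.position = position
        self.last_update = timestamp
        return True


def spatial_relation(a, b):
    """Euclidean distance between two twins' positions."""
    return dist(a.position, b.position)


def temporal_relation(a, b):
    """Absolute difference between the twins' last update times."""
    return abs(a.last_update - b.last_update)


# Two hypothetical devices in the same plant.
pump = DigitalTwin("pump-1", (0.0, 0.0, 0.0))
valve = DigitalTwin("valve-1", (3.0, 4.0, 0.0))
pump.sync(10.0, {"rpm": 1200})
pump.sync(5.0, {"rpm": 0})       # stale update, ignored
valve.sync(12.0, {"open": True})
```

Keeping the relations as functions over pairs of twins, rather than as fields of a single twin, reflects the observation above that the value of a CPS lies largely in how the twins relate to one another.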

IoT domains and Visualization

IoT technologies have already entered various significant domains of our lives. Growing market competition and inexpensive connectivity have driven the emergence of the IoT across many domains. The sensors, devices, and machines connected via the Internet are the “things” in IoT. The enormous volume of IoT data provides the information that must be analyzed to gain knowledge. Visual analytics, involving data analysis methods, artificial intelligence, and visualization, aims to improve domain operations with respect to efficiency, flexibility, and safety. The employment of smart IoT devices facilitates the transformation of traditional domains into modern, smart, and autonomous domains. In recent years, many traditional domains such as healthcare, energy, industry, transportation, city and building management, and agriculture have become IoT-based, with intelligent human-to-machine (H2M) and machine-to-machine (M2M) communication.

Challenges and Future Work

The main objective of visual analytics is to discover knowledge and produce actionable insight. This is achieved by processing large and complex data sets through the integration of techniques from various fields such as data analysis, data management, visualization, knowledge discovery, analytical reasoning, human perception, and human-computer interaction. Even though visualization is an important component of Big IoT data analytics, most visualization tools perform poorly in terms of functionality, scalability, interaction, infrastructure, insight creation, and evaluation.

Conclusion

The emergence of IoT services has drastically increased the growth rate of data production, creating large and complex data sets. The integration of human judgment into the data analysis process enables visual analytics to discover knowledge and gain valuable insight from these data sets. In this process, every piece of IoT data is considered crucial for the extraction of information and useful patterns. Human cognitive and perceptual capabilities identify patterns efficiently when data is represented visually. Data visualization methods face several challenges in handling voluminous and streaming IoT data without compromising performance and response time.

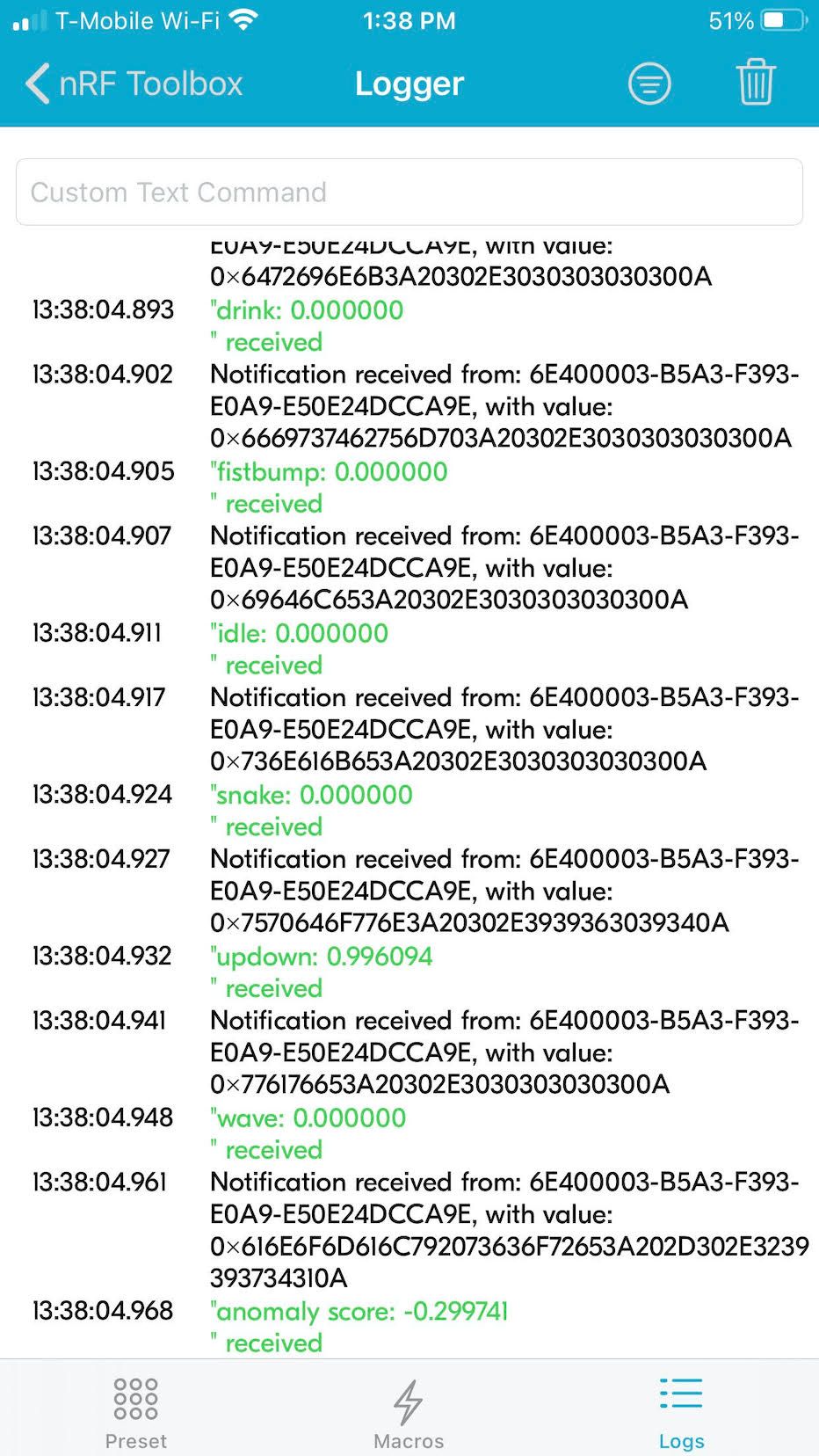

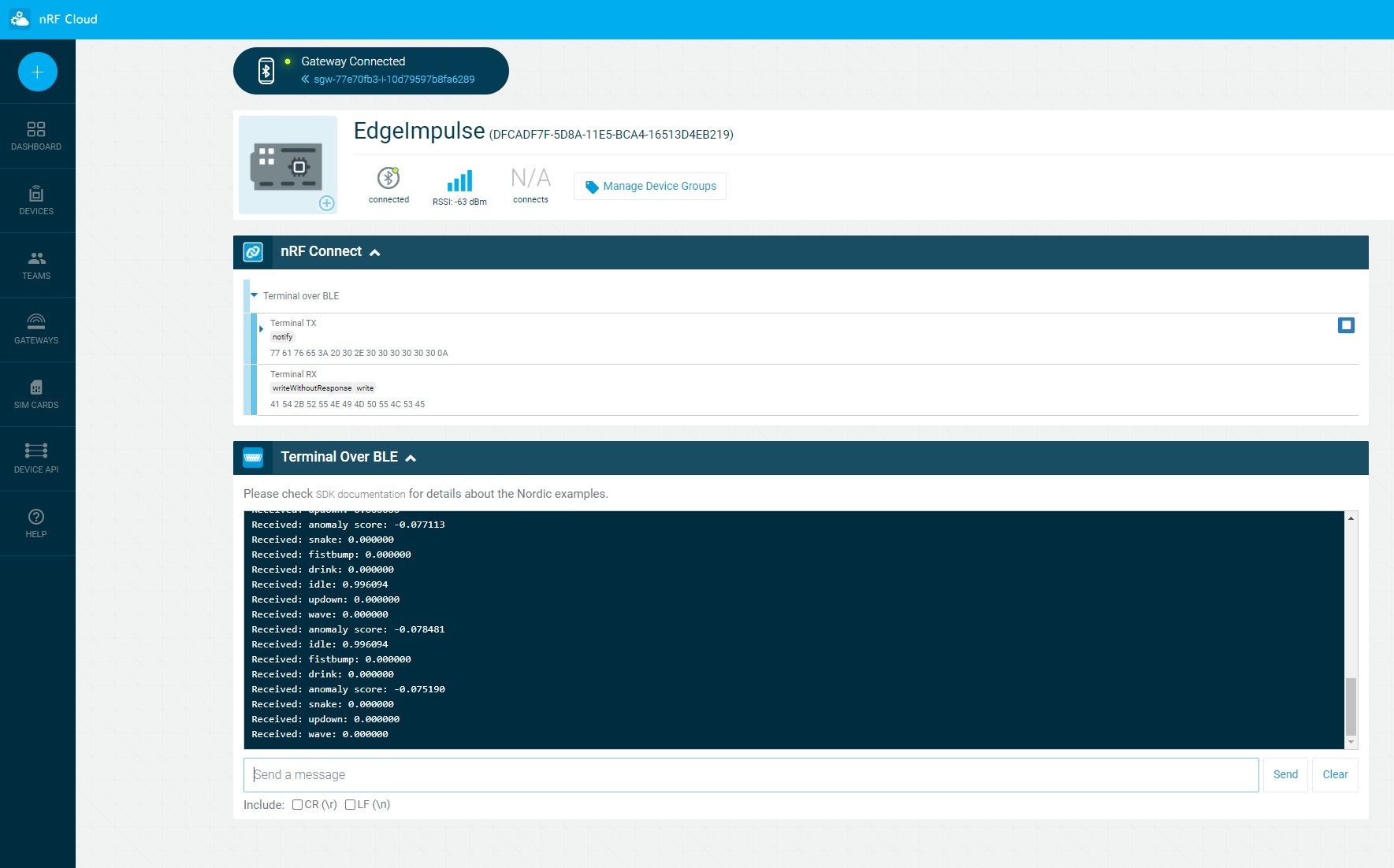

Figure 3. Classification Output Shown in nRF Cloud
