Against the backdrop of digital technology and the industrial revolution, the Internet of Things (IoT) has become one of the most influential and disruptive of the latest technologies. IoT is already making a palpable difference in how businesses operate.

Although the Fourth Industrial Revolution is still in its infancy, early adopters of this technology are already edging out their competitors.

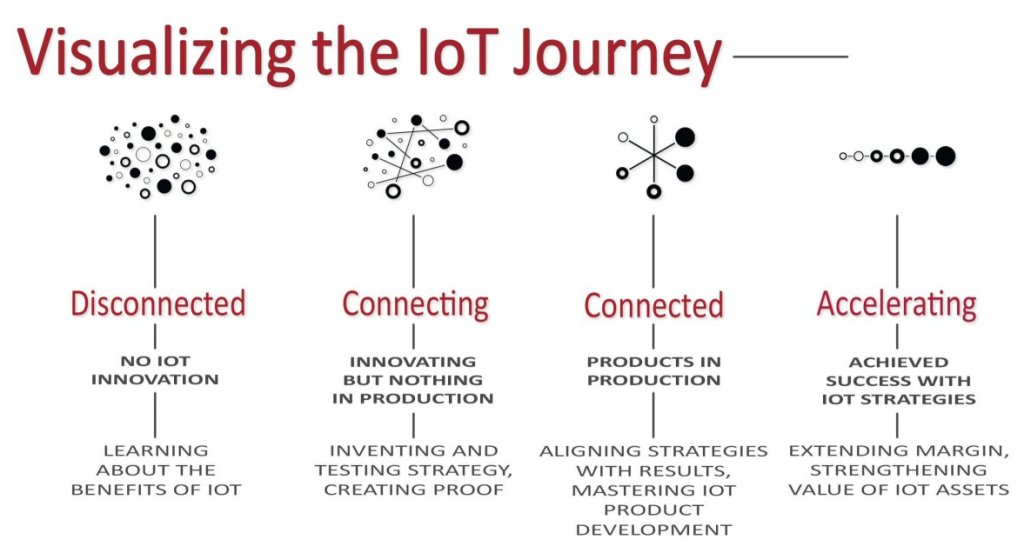

Businesses eager to take part in this disruptive technology are jostling to implement IoT solutions. Yet many are unaware of the steps involved in an effective implementation and the challenges they might face along the way.

This is a complete guide, the only one you’ll need, to effective and uncomplicated IoT implementation.

Key Elements of IoT

There are three main elements of IoT technology: connected devices, continuous data sharing, and putting the collected data to use.

IoT devices are connected to the internet, have a unique identifier such as a URI (Uniform Resource Identifier), and can relay data to the connected network. The devices can be connected to each other, to a centralized server, to a cloud, or to a network of servers.

IoT devices continuously share data with other devices on the network or with the server.

IoT devices do not simply gather data; they transmit it to their endpoints or to a server. There is no point in collecting data if it is not put to good use. The collected data is used to deliver smart IoT solutions for automation, make real-time business decisions, formulate strategies, and monitor processes.

How Does IoT Work?

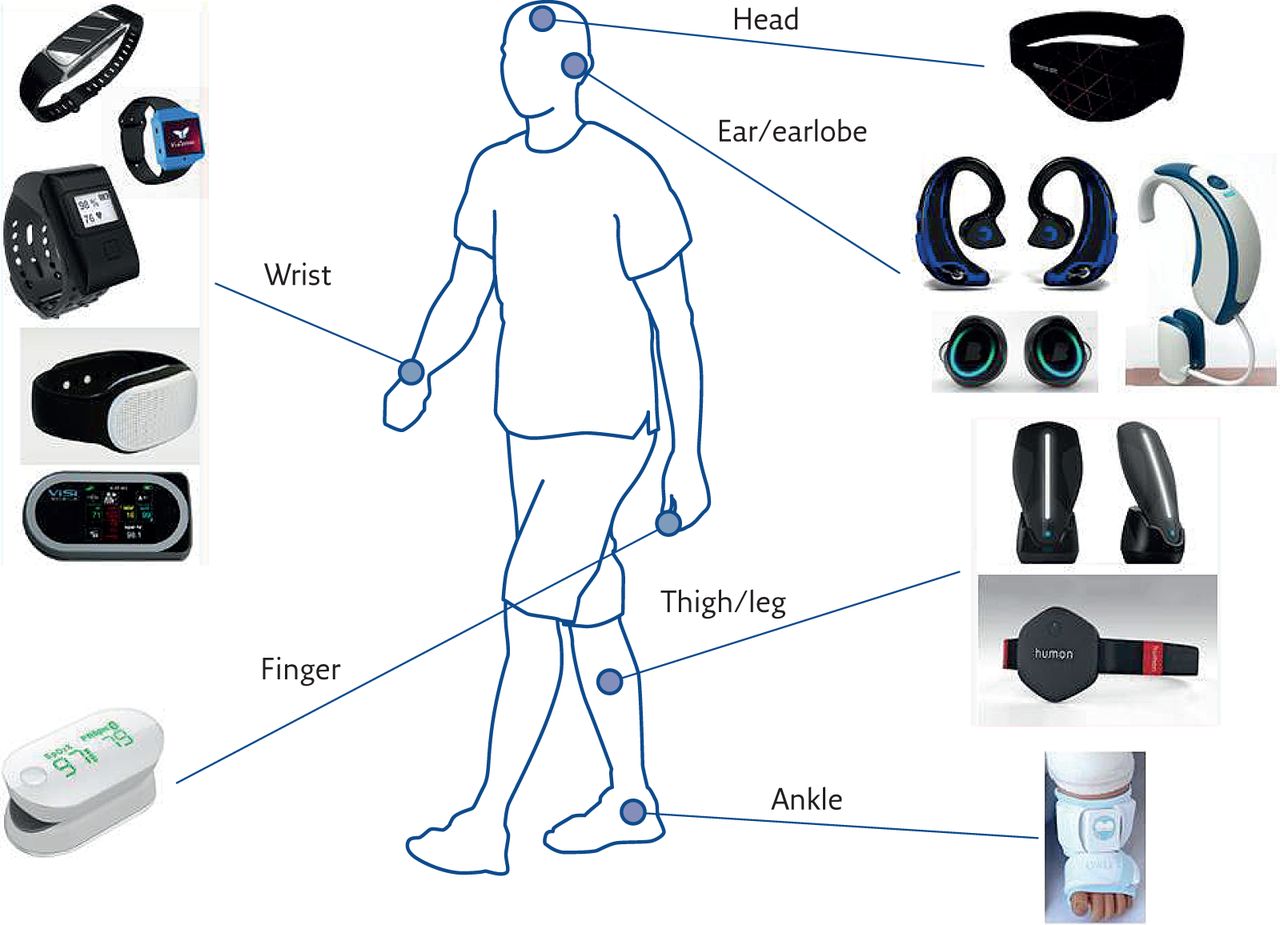

IoT devices have a unique identifier (such as a URI) and come with embedded sensors. With these sensors, the devices sense their environment and gather information; the devices could be air conditioners, smart watches, cars, and so on. All the devices then send their collected data to the IoT platform or gateway.

The IoT platform then analyzes the data from these various sources and derives useful information as required.
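To make this flow concrete, here is a minimal Python sketch, assuming each device identifies itself with a string ID and reports one numeric reading per message. The `Reading` and `Platform` classes and the device IDs are hypothetical illustrations, not the API of any particular IoT platform.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass
class Reading:
    device_id: str   # hypothetical unique identifier for the device
    metric: str      # what the sensor measured, e.g. "temperature_c"
    value: float


class Platform:
    """Toy stand-in for an IoT platform that collects readings from many devices."""

    def __init__(self):
        # Map (device_id, metric) -> list of values received so far
        self._data = defaultdict(list)

    def ingest(self, reading: Reading) -> None:
        # Devices push their readings here (in practice, often via a gateway)
        self._data[(reading.device_id, reading.metric)].append(reading.value)

    def summary(self, metric: str) -> dict:
        # Derive per-device averages for one metric across all sources
        return {
            device_id: mean(values)
            for (device_id, m), values in self._data.items()
            if m == metric
        }


platform = Platform()
for r in [
    Reading("ac-01", "temperature_c", 22.0),
    Reading("ac-01", "temperature_c", 24.0),
    Reading("car-07", "temperature_c", 31.0),
    Reading("watch-03", "heart_rate_bpm", 72.0),
]:
    platform.ingest(r)

print(platform.summary("temperature_c"))  # {'ac-01': 23.0, 'car-07': 31.0}
```

Keying the stored data by both device and metric lets the same platform serve very different devices (an air conditioner and a smart watch here) without mixing their readings.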

What are the Layers in IoT Architecture?

Although there isn’t a universally accepted standard IoT architecture, the four-layer architecture is generally considered the basic form. Its four layers are perception, network, middleware, and application.

Perception is the first, or physical, layer of IoT architecture. It contains the sensors, edge devices, and actuators, and the sensors gather useful information based on the project’s needs. The purpose of this layer is to gather data and pass it to the next layer.

The network layer connects the perception layer to the upper layers. It gathers information from the perception layer and transmits the data to other devices or servers.

The middleware layer offers storage and processing capabilities. It stores the incoming data and applies appropriate analytics based on requirements.

The application layer is the one the user interacts with; it is responsible for delivering specific services to the end user.
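The four layers can be pictured as stages in a pipeline. The Python sketch below is a toy model, assuming JSON as the wire format for the network layer and an in-memory list as middleware storage; the function names and the 30 C alert threshold are hypothetical, chosen only for illustration.

```python
import json


# Hypothetical layer functions for illustration only
def perception_layer(raw_celsius: float, device_id: str) -> dict:
    # A sensor reads the environment and packages the measurement
    return {"device_id": device_id, "temperature_c": raw_celsius}


def network_layer(message: dict) -> bytes:
    # Serialize the message for transmission (JSON is an assumed format)
    return json.dumps(message).encode("utf-8")


class MiddlewareLayer:
    def __init__(self):
        self.store = []  # in-memory stand-in for a database

    def receive(self, payload: bytes) -> None:
        # Decode and store the incoming message
        self.store.append(json.loads(payload.decode("utf-8")))

    def average_temperature(self) -> float:
        # Simple analytics over everything stored so far
        return sum(m["temperature_c"] for m in self.store) / len(self.store)


def application_layer(middleware: MiddlewareLayer, threshold_c: float = 30.0) -> str:
    # Turn the analytics into a service the end user sees
    avg = middleware.average_temperature()
    status = "ALERT: too hot" if avg > threshold_c else "OK"
    return f"Average {avg:.1f} C - {status}"


middleware = MiddlewareLayer()
for device, value in [("sensor-a", 28.0), ("sensor-b", 35.0)]:
    middleware.receive(network_layer(perception_layer(value, device)))

print(application_layer(middleware))  # Average 31.5 C - ALERT: too hot
```

Each layer only depends on the output of the one below it, which is what lets real systems swap, for example, one network protocol for another without touching the application layer.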

Implementation Requirements

Effective and seamless IoT implementation depends on a few key requirements: security, asset management, cloud computing, and data analytics.

Security is one of the fundamental IoT implementation requirements. Since IoT devices gather sensitive, real-time data about their environment, it is critical to put strong security measures in place so that this information stays protected and confidential.
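One common building block for protecting device data is message authentication, so that the server can reject readings that were tampered with in transit. The sketch below uses Python's standard `hmac` and `hashlib` modules with a per-device shared secret; the secret value and the message layout are assumptions, and a real deployment would also encrypt traffic (for example with TLS) and keep keys in secure storage.

```python
import hashlib
import hmac
import json

# Hypothetical per-device secret; in practice it is provisioned securely, never hard-coded
DEVICE_SECRET = b"example-secret-for-ac-01"


def sign(message: dict, secret: bytes) -> str:
    # Serialize deterministically so sender and receiver hash identical bytes
    body = json.dumps(message, sort_keys=True).encode("utf-8")
    return hmac.new(secret, body, hashlib.sha256).hexdigest()


def verify(message: dict, signature: str, secret: bytes) -> bool:
    # compare_digest avoids leaking information through timing differences
    return hmac.compare_digest(sign(message, secret), signature)


reading = {"device_id": "ac-01", "temperature_c": 22.5}
tag = sign(reading, DEVICE_SECRET)

print(verify(reading, tag, DEVICE_SECRET))   # True
tampered = {**reading, "temperature_c": 99.0}
print(verify(tampered, tag, DEVICE_SECRET))  # False
```

An HMAC proves integrity and origin but does not hide the data, which is why it is usually paired with encryption rather than used instead of it.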

Asset management includes the software, hardware, and processes that ensure that the devices are registered, upgraded, secured, and well-managed.
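A device registry is one way to track which devices are registered and which need a firmware upgrade. This Python sketch is hypothetical: the `Device` and `DeviceRegistry` classes, their fields, and the version numbers are illustrative assumptions, not a specific asset-management product.

```python
from dataclasses import dataclass, field


@dataclass
class Device:
    device_id: str
    firmware: tuple  # firmware version as (major, minor, patch)


# Hypothetical registry for illustration, not a real asset-management API
@dataclass
class DeviceRegistry:
    devices: dict = field(default_factory=dict)

    def register(self, device: Device) -> None:
        # Refuse duplicate IDs so every device stays uniquely identifiable
        if device.device_id in self.devices:
            raise ValueError(f"{device.device_id} is already registered")
        self.devices[device.device_id] = device

    def needs_upgrade(self, latest: tuple) -> list:
        # Tuples compare element by element, so (1, 2, 0) < (1, 3, 1)
        return sorted(d.device_id for d in self.devices.values() if d.firmware < latest)

    def upgrade(self, device_id: str, version: tuple) -> None:
        # Record a completed firmware upgrade
        self.devices[device_id].firmware = version


registry = DeviceRegistry()
registry.register(Device("ac-01", (1, 2, 0)))
registry.register(Device("car-07", (1, 3, 1)))
registry.register(Device("watch-03", (1, 1, 5)))

print(registry.needs_upgrade(latest=(1, 3, 1)))  # ['ac-01', 'watch-03']
registry.upgrade("ac-01", (1, 3, 1))
print(registry.needs_upgrade(latest=(1, 3, 1)))  # ['watch-03']
```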

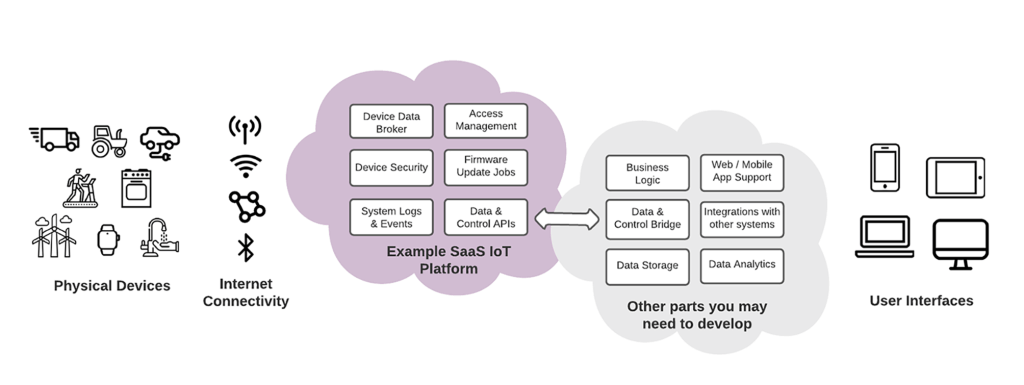

Since massive amounts of structured and unstructured data are gathered and processed, the data is typically stored in the cloud. The cloud acts as a centralized repository of resources that makes the data easy to access, and cloud computing helps enable seamless communication between various IoT devices.

On the cloud platform, advanced algorithms process and analyze these large amounts of data. You can then derive trends from the analytics and take corrective action.
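As a small illustration of deriving a trend and triggering corrective action, the sketch below fits an ordinary least-squares slope to hourly readings using only plain Python. The readings, the 0.5-units-per-hour threshold, and the `corrective_action` rule are hypothetical; real platforms typically use far more sophisticated analytics.

```python
def trend_slope(values: list) -> float:
    # Ordinary least-squares slope of the values against their index (0, 1, 2, ...)
    n = len(values)
    if n < 2:
        raise ValueError("need at least two readings to estimate a trend")
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    num = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(values))
    den = sum((x - x_mean) ** 2 for x in range(n))
    return num / den


def corrective_action(values: list, max_slope: float = 0.5) -> str:
    # Hypothetical rule: flag a machine whose temperature rises too fast
    slope = trend_slope(values)
    if slope > max_slope:
        return f"rising {slope:.2f}/h: schedule maintenance"
    return f"stable {slope:.2f}/h: no action"


hourly_temps = [60.0, 60.5, 61.5, 62.0, 63.0]
print(corrective_action(hourly_temps))  # rising 0.75/h: schedule maintenance
```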

What are the IoT Implementation Steps?

Knowing the appropriate IoT implementation steps will help your business align its goals and expectations with the solution. You can also ensure that the entire process is time-bound, cost-efficient, and meets all your business needs.

Set Business Objectives

IoT implementation should serve your business goals and objectives. Not every entrepreneur is an accomplished technician or computer-savvy, so if you lack practical know-how about IoT, the components needed, or other specialist knowledge, you can hire experts.

Think about what you want to accomplish with IoT, such as improving customer experience, eliminating operational inconsistencies, or reducing costs. With a clear understanding of IoT technology, you should be able to align your business needs with IoT applications.

Hardware Components and Tools

Selecting the necessary tools, components, hardware, and software systems needed for the implementation is the next critical step. First, you must choose the tools and technology, keeping in mind connectivity and interoperability.

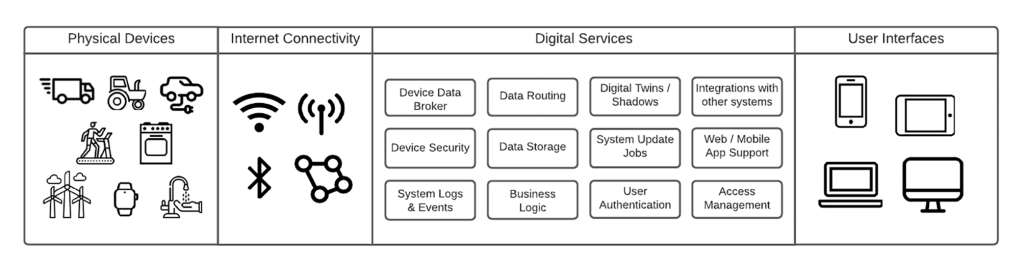

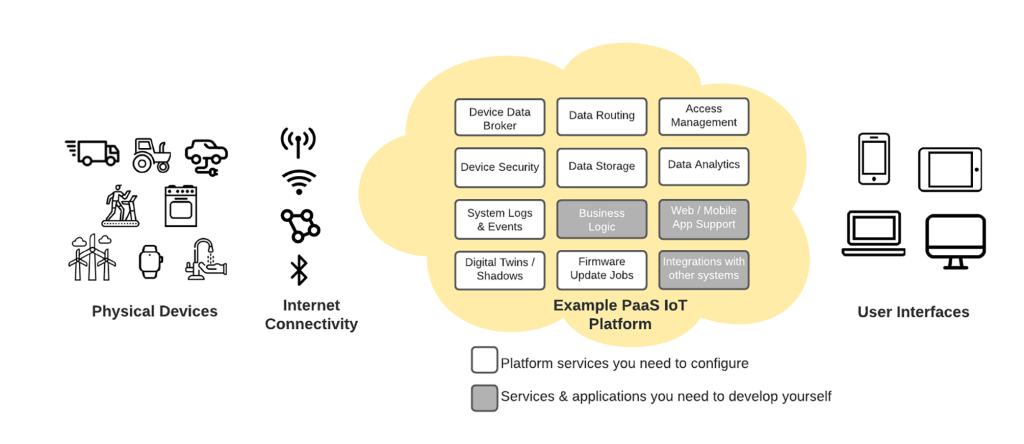

You should also select the right IoT platform to act as a centralized hub for collecting data and controlling all aspects of the network and devices. You can build a custom platform or get one from a supplier.

Some of the major components you require for implementation include:

- Sensors

- Gateways

- Communication protocols

- IoT platforms

- Analytics and data management software

Implementation

Before initiating the implementation process, it is recommended that you put together a team of IoT experts and professionals with experience in your chosen use case. Make sure the team includes experts from operations and IT with a specific skill set in IoT.

A typical team should include experts in mechanical engineering, embedded system design, electrical and industrial design, and front-end and back-end development.

Prototyping

Before giving the go-ahead for full deployment, the team must develop a prototype of the IoT implementation.

A prototype lets you experiment and identify fault lines, connectivity problems, and compatibility issues. After testing the prototype, you can incorporate revised design ideas.
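During prototyping, it can help to simulate an unreliable link before all the real hardware is available. The Python sketch below assumes a hypothetical `FlakyLink` that drops messages on chosen attempts and a sender that retries with exponential backoff; the failure pattern and retry limits are illustrative values, not recommendations.

```python
import time


class FlakyLink:
    """Simulated network link (hypothetical, for prototyping) that fails on chosen attempts."""

    def __init__(self, fail_on: set):
        self.fail_on = fail_on  # 1-based attempt numbers that should fail
        self.attempts = 0
        self.delivered = []

    def send(self, message: str) -> None:
        self.attempts += 1
        if self.attempts in self.fail_on:
            raise ConnectionError(f"attempt {self.attempts} dropped")
        self.delivered.append(message)


def send_with_retry(link, message: str, max_retries: int = 3, base_delay: float = 0.01) -> int:
    # One initial try plus up to max_retries retries; returns the number of tries used
    for attempt in range(1, max_retries + 2):
        try:
            link.send(message)
            return attempt
        except ConnectionError:
            if attempt > max_retries:
                raise  # out of retries: surface the failure to the caller
            # Exponential backoff: wait base_delay, then 2x, 4x, ...
            time.sleep(base_delay * 2 ** (attempt - 1))


link = FlakyLink(fail_on={1, 2})
print(send_with_retry(link, "temp=22.5"))  # 3
print(link.delivered)                      # ['temp=22.5']
```

Simulating failures this way makes connectivity issues reproducible, so the same test can be rerun after each design change.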

Integrate with Advanced Technologies

After the sensors gather useful data, you can add layers of other technologies such as analytics, edge computing, and machine learning.

Sensors collect far more unstructured data than structured data. For both kinds of data, machine learning, deep learning neural networks, and cognitive computing technologies can be used to extract insights and drive improvements.
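As a simple stand-in for the analytics that might run at the edge, the sketch below summarizes a batch of sensor readings locally and forwards only the summary plus any readings more than two standard deviations from the batch mean. This z-score rule is a basic statistical technique, not machine learning; the threshold and the data are hypothetical.

```python
from statistics import mean, pstdev


def edge_summarize(readings: list, z_threshold: float = 2.0) -> dict:
    # Compute batch statistics on the device or gateway instead of in the cloud
    mu = mean(readings)
    sigma = pstdev(readings)
    # Simple z-score rule (not machine learning): flag readings far from the mean
    anomalies = [
        r for r in readings
        if sigma > 0 and abs(r - mu) / sigma > z_threshold
    ]
    # Only this compact dict would be sent upstream
    return {"count": len(readings), "mean": round(mu, 2), "anomalies": anomalies}


batch = [20.1, 20.3, 19.9, 20.0, 20.2, 35.0, 20.1, 19.8, 20.0, 20.2]
print(edge_summarize(batch))  # {'count': 10, 'mean': 21.56, 'anomalies': [35.0]}
```

Sending a summary instead of every raw reading reduces bandwidth and cloud storage, which is one of the main reasons to add an edge layer.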

Take Security Measures

Security is one of the top concerns of most businesses. Because IoT depends predominantly on the internet to function, it is prone to security attacks. However, secure communication protocols, endpoint security, encryption, and access control management can minimize security breaches.
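Access control management can start as simply as mapping roles to the actions they are allowed to perform. The sketch below is a hypothetical role-based check; the role names and permissions are assumptions for illustration, and a production system would tie roles to authenticated identities.

```python
# Hypothetical role-to-permission table
PERMISSIONS = {
    "viewer": {"read_telemetry"},
    "operator": {"read_telemetry", "send_command"},
    "admin": {"read_telemetry", "send_command", "update_firmware"},
}


def is_allowed(role: str, action: str) -> bool:
    # Unknown roles get no permissions (deny by default)
    return action in PERMISSIONS.get(role, set())


def handle_request(role: str, action: str) -> str:
    # Check permissions before acting on any device request
    if not is_allowed(role, action):
        return f"denied: {role} may not {action}"
    return f"ok: {action}"


print(handle_request("viewer", "send_command"))    # denied: viewer may not send_command
print(handle_request("admin", "update_firmware"))  # ok: update_firmware
```

Denying by default means a misconfigured or unknown role cannot accidentally send commands to devices.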

Although there are no standardized IoT implementation steps, most projects follow this process; the exact sequence, however, depends on your project’s specific needs.

Challenges in IoT Implementation

Every new technology comes with its own set of implementation challenges.

When you keep these challenges of IoT implementation in mind, you’ll be better equipped to handle them.

Security

When your entire system depends on network connectivity to function, you add another layer of security concerns to deal with.

Unless you have a robust network security system, you are likely to face attacks such as hackers breaking into your servers or devices. Unfortunately, IoT attacks are on the rise, with over 1.5 million security breaches reported in 2021 alone.


Data Retention and Storage

IoT devices continually gather data, and over time the data becomes unwieldy to handle. Such massive amounts of data need high-capacity storage units and advanced IoT analytics technologies.
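One common way to keep stored data manageable is a retention policy: keep recent raw readings and replace older ones with coarser aggregates. The sketch below assumes readings are `(timestamp_seconds, value)` pairs and uses a hypothetical policy of 24 hours of raw data with hourly averages for anything older.

```python
from collections import defaultdict

HOUR = 3600
RAW_RETENTION = 24 * HOUR  # keep raw readings for the last 24 hours (assumed policy)


def apply_retention(readings: list, now: int) -> tuple:
    """Split readings into recent raw data and hourly averages of older data."""
    recent = []
    old_buckets = defaultdict(list)
    for ts, value in readings:
        if now - ts <= RAW_RETENTION:
            recent.append((ts, value))
        else:
            # Group older readings by the start of their hour
            old_buckets[ts - ts % HOUR].append(value)
    hourly = sorted(
        (hour_start, sum(vals) / len(vals)) for hour_start, vals in old_buckets.items()
    )
    return recent, hourly


now = 100 * HOUR
readings = [
    (10 * HOUR, 20.0),
    (10 * HOUR + 600, 22.0),  # same hour as the previous reading
    (11 * HOUR + 60, 25.0),
    (99 * HOUR, 21.0),        # within the last 24 hours, kept raw
]
recent, hourly = apply_retention(readings, now)
print(recent)  # [(356400, 21.0)]
print(hourly)  # [(36000, 21.0), (39600, 25.0)]
```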

Integration and Compatibility

IoT implementation involves many sensors, devices, and tools, and a successful implementation largely depends on seamless integration between these systems. In addition, since there are no universal standards for devices or protocols, there could be major compatibility issues during implementation.
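When devices from different vendors report data in different formats, a thin adapter layer that maps each format onto one common schema can ease integration. The two vendor payload formats below are invented for illustration; real devices will have their own documented formats.

```python
def from_vendor_a(payload: dict) -> dict:
    # Hypothetical vendor A: {"id": ..., "tempF": ...} in Fahrenheit
    return {
        "device_id": payload["id"],
        "temperature_c": round((payload["tempF"] - 32) * 5 / 9, 2),
    }


def from_vendor_b(payload: dict) -> dict:
    # Hypothetical vendor B: {"sensor": {"serial": ...}, "readings": {"celsius": ...}}
    return {
        "device_id": payload["sensor"]["serial"],
        "temperature_c": float(payload["readings"]["celsius"]),
    }


ADAPTERS = {"vendor_a": from_vendor_a, "vendor_b": from_vendor_b}


def normalize(source: str, payload: dict) -> dict:
    # Look up the adapter for this source; unknown formats fail loudly
    try:
        adapter = ADAPTERS[source]
    except KeyError:
        raise ValueError(f"no adapter for source {source!r}") from None
    return adapter(payload)


print(normalize("vendor_a", {"id": "th-9", "tempF": 212}))
# {'device_id': 'th-9', 'temperature_c': 100.0}
print(normalize("vendor_b", {"sensor": {"serial": "B-42"}, "readings": {"celsius": "21.5"}}))
# {'device_id': 'B-42', 'temperature_c': 21.5}
```

Adding support for a new device type then means writing one more adapter, while everything downstream keeps working with the common schema.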

IoT is a new technology that is already delivering promising results. Yet, as with any technology, your business can’t hope to leverage its immense benefits without proper implementation.

Taking chances with IoT implementation is not a smart business move, as your productivity, security, customer experience, and future depend on proper and effective implementation. The surest way to harness this technology is to work with a reliable IoT app development company that can carry your initiatives to success.