Featured Posts (677)

A scientist from Russia has developed a new neural network architecture and tested its learning ability on the recognition of handwritten digits. The intelligence of the network was amplified by chaos, and the classification accuracy reached 96.3%. The network can be used in microcontrollers with a small amount of RAM and embedded in such household items as shoes or refrigerators, making them 'smart.' The study was published in Electronics.

Today, the search for new neural networks that can operate on microcontrollers with a small amount of random access memory (RAM) is of particular importance. For comparison, in ordinary modern computers, random access memory is measured in gigabytes. Although microcontrollers possess significantly less processing power than laptops and smartphones, they are smaller and can be interfaced with household items. Smart doors, refrigerators, shoes, glasses, kettles and coffee makers create the foundation for so-called ambient intelligence. The term denotes an environment of interconnected smart devices.

An example of ambient intelligence is a smart home. Devices with limited memory are not able to store a large number of keys for secure data transfer or large arrays of neural network settings. This prevents the introduction of artificial intelligence into Internet of Things devices, as they lack the required computing power. However, artificial intelligence would allow smart devices to spend less time on analysis and decision-making, better understand a user and assist them in a friendly manner. Therefore, many new opportunities can arise in the creation of ambient intelligence, for example, in the field of health care.

Andrei Velichko from Petrozavodsk State University, Russia, has created a new neural network architecture that allows efficient use of small volumes of RAM and opens up opportunities for introducing low-power devices to the Internet of Things. The network, called LogNNet, is a feed-forward neural network in which the signals are directed exclusively from input to output. It uses deterministic chaotic filters for the incoming signals. The system randomly mixes the input information, but at the same time extracts valuable data from the information that is initially invisible. A similar mechanism is used by reservoir neural networks. To generate chaos, a simple logistic mapping equation is applied, where the next value is calculated based on the previous one. The equation is commonly used in population biology and as an example of a simple equation for calculating a sequence of chaotic values. In this way, the simple equation stands in for a practically unlimited set of random-looking numbers that the processor calculates on demand, so the network architecture can use them while consuming less RAM.
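As a rough illustration of the logistic mapping idea, the sketch below regenerates a small weight matrix from two stored numbers instead of keeping the whole matrix in RAM. The function names, the parameter r = 3.9, the starting value 0.1 and the rescaling to the range (-1, 1) are assumptions chosen for the example; they are not LogNNet's published parameters or procedure.

```python
def logistic_sequence(x0, r, n):
    """Yield n values of the logistic map x_{k+1} = r * x_k * (1 - x_k)."""
    x = x0
    for _ in range(n):
        x = r * x * (1.0 - x)
        yield x


def chaotic_weights(rows, cols, x0=0.1, r=3.9):
    """Build a rows x cols weight matrix on the fly from the logistic map.

    Only x0 and r have to be stored; the weights are recomputed when needed.
    Values are rescaled from (0, 1) to (-1, 1). All defaults are illustrative.
    """
    seq = logistic_sequence(x0, r, rows * cols)
    return [[2.0 * next(seq) - 1.0 for _ in range(cols)] for _ in range(rows)]


def mix(inputs, weights):
    """Apply the chaotic filter: one weighted sum of the inputs per matrix row."""
    return [sum(w * v for w, v in zip(row, inputs)) for row in weights]


weights = chaotic_weights(rows=4, cols=8)
features = mix([0.0, 0.5, 1.0, 0.5, 0.0, 0.25, 0.75, 1.0], weights)
print(features)
```

Because the sequence is deterministic, the same x0 and r always reproduce the same weights, which is what lets a device trade processor time for memory.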

The scientist tested his neural network on handwritten digit recognition from the MNIST database, which is considered the standard for training neural networks to recognize images. The database contains 70,000 handwritten digits. Sixty thousand of these digits are intended for training the neural network, and another 10,000 for network testing. The more neurons and chaos in the network, the better it recognized images. The maximum accuracy achieved by the network is 96.3%, while the developed architecture uses no more than 29 KB of RAM. In addition, LogNNet demonstrated promising results using very small RAM sizes, in the range of 1-2 KB. A miniature controller, the ATmega328, which can be embedded into a smart door or even a smart insole, has approximately the same amount of memory.

"Thanks to this development, new opportunities for the Internet of Things are opening up, as any device equipped with a low-power miniature controller can be powered with artificial intelligence. In this way, a path is opened for intelligent processing of information on peripheral devices without sending data to cloud services, and it improves the operation of, for example, a smart home. This is an important contribution to the development of IoT technologies, which are actively researched by the scientists of Petrozavodsk State University. In addition, the research outlines an alternative way to investigate the influence of chaos on artificial intelligence," said Andrei Velichko.

Originally posted HERE.

by Russian Science Foundation

Image Credit: Andrei Velichko

The Internet of Things (IoT) has revolutionized many industries, including inventory management. IoT is a concept where devices are interconnected via the internet. It is expected that by 2020, there will be 26 billion devices connected worldwide. These connections are important because they allow data sharing, which in turn can trigger actions that make life and business more efficient. Since inventory is a significant portion of a company’s assets, inventory data is vital to the accounting department for the company’s asset management and annual report.

In inventory solutions based on IoT and RFID, each individual inventory item receives an RFID tag. Each tag has a unique identification number (ID) that contains information about an inventory item, e.g. a model, a batch number, etc. These tags are scanned by an RFID reader. Upon scanning, the reader extracts the tags’ IDs and transmits them to the cloud for processing. Along with each tag’s ID, the cloud receives the location and the time of the reading. This data is used to update the records about inventory items, allowing users to monitor the inventory from anywhere, in real time.
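The flow described above (tag ID plus location and time of reading, sent to the cloud and used to update inventory records) can be sketched as follows. The class name, field names, tag IDs and location labels are all hypothetical and only serve the illustration; a real deployment would depend on the reader hardware and cloud platform in use.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class TagRead:
    """One scan reported by a reader: tag ID plus where and when it was read."""
    tag_id: str
    location: str
    read_at: datetime


def apply_reads(inventory, reads):
    """Keep the most recent read per tag, giving each item's last known location."""
    for read in sorted(reads, key=lambda r: r.read_at):
        inventory[read.tag_id] = read
    return inventory


reads = [
    TagRead("TAG-0001", "dock-A", datetime(2020, 1, 1, 8, 0, tzinfo=timezone.utc)),
    TagRead("TAG-0002", "dock-A", datetime(2020, 1, 1, 8, 5, tzinfo=timezone.utc)),
    TagRead("TAG-0001", "shelf-12", datetime(2020, 1, 1, 9, 30, tzinfo=timezone.utc)),
]
inventory = apply_reads({}, reads)
for tag_id, read in inventory.items():
    print(tag_id, read.location, read.read_at.isoformat())
```

Sorting by read time before applying the updates means that reads arriving out of order still leave the latest location in place.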

Industrial IoT

The role of IoT in inventory management is to receive data and turn it into meaningful insights about inventory items’ location and status, giving users a corresponding output. For example, based on the data, an inventory management solution can forecast the amount of raw materials needed for the upcoming production cycle. The system can also send an alert if any individual inventory item is lost.

Moreover, IoT-based inventory management solutions can be integrated with other systems, e.g. ERP, and share data with other departments.

RFID in Industrial IoT

An RFID system consists of three main components: tags, antennas, and readers.

Tags: An RFID tag carries information about a specific object. It can be attached to any surface, including raw materials, finished goods, packages, etc.

RFID antennas: An RFID antenna carries the radio signals that supply power and data for the tags’ operation.

RFID readers: An RFID reader uses radio signals to read and write to the tags. The reader receives data stored in the tag and transmits it to the cloud.

Benefits of IoT in inventory management

The benefits of IoT in the supply chain are among the most tangible effects of the technology that we can observe. IoT in the supply chain creates unparalleled transparency that increases efficiency.

Inventory tracking

The major benefit of IoT in inventory management is asset tracking. Instead of using barcodes to scan and record data, items carry RFID tags, which can be registered wirelessly. This makes it possible to obtain accurate data and track items from any point in the supply chain.

With RFID and IoT, managers don’t have to spend time on manual tracking and reporting on spreadsheets. Each item is tracked and the data about it is recorded automatically. Automated asset tracking and reporting save time and reduce the probability of human error.

Inventory optimization

With real-time data about the quantity and the location of the inventory, manufacturers can reduce the amount of inventory on hand while still meeting the needs of the customers at the end of the supply chain.

Combined with machine learning, the data about the amount of available inventory can be used to forecast the required inventory, which allows manufacturers to reduce lead time.

Remote tracking

Remote product tracking makes it easy to keep an eye on production and business. Knowing production and transit times allows you to better tweak orders to suit lead times and to respond to fluctuating demand. It shows which suppliers are meeting production and shipping criteria and which need monitoring to achieve the required outcome.

It gives visibility into the flow of raw materials, work-in-progress and finished goods by providing updates about the status and location of the items so that inventory managers see when an individual item enters or leaves a specific location.

Bottlenecks in the operations

With real-time data about location and quantity, manufacturers can reveal bottlenecks in the process and pinpoint machines with lower utilization rates. For instance, if part of the inventory tends to pile up in front of a machine, a manufacturer can assume that the machine is underutilized and needs to be seen to.

The Outcomes

The data collected by an IoT-based inventory management system is more accurate and up-to-date. By reducing time delays, manufacturers can enhance accuracy and reduce wastage. An IoT-based inventory management solution offers complete visibility on inventory by providing real-time information fetched by RFID tags. It helps to track the exact location of raw materials, work-in-progress and finished goods. As a result, manufacturers can balance the amount of on-hand inventory, increase the utilization of machines, reduce lead time, and thus avoid the costs bound to less effective methods. This is all about optimizing inventory and ensuring anything ordered can be sold through whatever channel necessary.

Originally posted here

After so many years evangelizing the Internet of Things (IoT) or developing IoT products or selling IoT services or using IoT technologies, it is hard to believe that today there are as many defenders as detractors of these technologies. Why does the doubt still assail us: "Believe or Not Believe in the IoT"? What's the reason we keep saying every year that the time for IoT is finally now?

Does it not seem strange that, after we have already experienced the power of change that comes from having connected devices on ourselves (wearables), in our homes, in cities, in transportation and in business, there are still so many non-believers? Maybe it is because the expectations in 2013 were so great that now, in 2020, we need more tangible and realistic data and facts to continue believing.

In recent months I have had more time to review my articles and some white papers and I think I have found some reasons to continue believing, but also reasons not to believe.

Below are some of these reasons, so that you can decide where to position yourself.

Top reasons to believe

- McKinsey continues to present us with new opportunities in IoT

- In its 2015 report “Internet of Things: Mapping the value beyond the hype”, the company estimated a potential economic impact of as much as US$11.1 trillion per year in 2025 for IoT applications in nine settings.

- In 2019, in “Growing opportunities in the Internet of Things”, it said that “The number of businesses that use the IoT technologies has increased from 13 percent in 2014 to about 25 percent today. And the worldwide number of IoT connected devices is projected to increase to 43 billion by 2023, an almost threefold increase from 2018.”

- Gartner predicted in 2019 that by 2021 there would be over 25 billion live IoT endpoints, enabling a practically unlimited number of IoT use cases.

- Harbor Research considers that the market opportunity for industrial internet of things (IIoT) and industry 4.0 is still emergent.

- Solutions are not completely new but are evolving from the convergence of existing technologies; creative combinations of these technologies will drive many new growth opportunities;

- As integration and interoperability across the industrial technology “stack” relies on classic IT principles like open architectures, many leading IT players are entering the industrial arena;

- IoT regulation is coming - The lack of regulation is one of the biggest issues associated with IoT devices, but things are starting to change in that regard as well. The U.S. government was among the first to take the threat posed by unsecured IoT devices seriously, introducing several IoT-related bills in Congress over the last couple of years. It all began with the IoT Cybersecurity Improvement Act of 2017, which set minimum security standards for connected devices obtained by the government. This legislation was followed by the SMART IoT Act, which tasked the Department of Commerce with conducting a study of the current IoT industry in the United States.

- Synergy of IoT and AI - IoT supported by artificial intelligence considerably improves success across a wide range of everyday applications, most prominently in enterprise, transportation, robotics, industrial, and automation systems.

- Believe in superpowers again, thanks to IoT - Today, IoT sensors are everywhere – in your car, in electronic appliances, in traffic lights, even probably on the pigeon outside your window (it’s true, it happened in London!). IoT sensors will help cities map air quality, identify high-pollution pockets and trigger alerts if pollution levels rise dangerously, while tracking changes over time and taking preventive measures to correct the situation. Thanks to IoT, connected cars will now communicate seamlessly with IoT sensors and find empty parking spots easily. Sensors in your car will also communicate with your GPS and the manufacturer’s system, making maintenance and driving a breeze! City sensors will identify high-traffic areas and regulate traffic flows by updating your GPS with alternate routes. These IoT sensors can also help identify and repair broken street lamps. IoT will be our knight in shining, super-strong metallic armor and help prevent disasters like floods and fires, and even road accidents, simply by monitoring the fatigue levels of truck drivers! Washing machines, refrigerators and air-conditioners will now self-monitor their usage, performance and servicing requirements, while triggering alerts before potential breakdowns and optimizing performance with automatic software updates. IoT sensors will now help medical professionals monitor pulse rates, blood pressure and other vitals more efficiently, while triggering alerts in case of emergencies. Soon, nanosensors in smart pills will make healthcare super-personalized and 10x more efficient!

Top reasons not to believe

- Three-fourths of IoT projects are failing globally. Governments and enterprises across the globe are rolling out Internet of Things (IoT) projects, but almost three-fourths of them fail, impacted by factors like culture and leadership, according to US tech giant Cisco (2017). Businesses are spending $745 billion worldwide on IoT hardware and software in 2019 alone. Yet three out of every four IoT implementations are failing.

- Few IoT projects survive proof-of-concept stage - About 60% of IoT initiatives get stalled at the Proof of Concept (PoC) stage. If the right steps aren’t taken in the beginning, say you don’t think far enough beyond the IT infrastructure, you end up in limbo: caught between the dream of what IoT could do for your business and the reality of today’s ROI. That spot is called proof-of-concept (POC) purgatory.

- IoT security is still a big concern - The 2019 annual report by SonicWall Capture Labs threat researchers, who analyzed data from over 200,000 malicious events, indicated a 217.5 percent increase in IoT attacks in 2018.

- There are several obstacles companies face both in calculating and realizing ROI from IoT. Very few companies can quantify the current, pre-IoT costs. The instinct is often to stop after calculating the cost impact on the layer of operations immediately adjacent to the potential IoT project. For example, when quantifying the baseline cost of reactive (versus predictive or prescriptive) maintenance, too many companies would only include down time for unexpected outages, but may not consider reduced life of the machine, maintenance overtime, lost sales due to long lead times, supply chain volatility risk for spare parts, and the list goes on.

- Privacy, And No, That’s Not the Same as Security. The Big Corporations don’t expect to make a big profit on the devices themselves. The Big Money in IoT is in Big Data. And enterprises and consumers do not want to expose everything sensors are learning about them.

- No Killer Application – I suggest reading my article “Worth it waste your time searching the Killer IoT Application?”

- No Interoperable Technology ecosystems - We have a plethora of IoT vendors, both large and small, jumping into the fray and trying to establish a foothold, in hopes of either creating their own ecosystem (for the startups) or extending their existing one (for the behemoths).

- Digital Fatigue – As if trying to explain IoT were not enough, more technologies such as Artificial Intelligence, Blockchain, 5G and AR / VR are now joining the party, and of course companies are saying enough.

You have the last word

We could go on forever looking for reasons to believe or not to believe in IoT, but we cannot continue to deny the evidence: millions of connected devices are already out there, and millions more will soon be waiting for us to exploit their full potential.

I still believe. But you have the last word.


By: Tom Jeltes, Eindhoven University of Technology

The Internet of Things (IoT) consists of billions of sensors and other devices connected to each other via internet, all of which need to be protected against hackers with malicious purposes. A low-cost and energy efficient solution for the security of IoT devices uses the unique characteristics of the built-in memory chips. Ph.D. candidate Lieneke Kusters investigated how to make optimal use of the chip's digital fingerprint to generate a security key.

The higher the number of devices connected to each other via the Internet of Things, the greater the risk that malicious hackers might gain access to important information, or even take over entire systems. Quite apart from all kinds of privacy issues, it's not hard to imagine that someone who, for example, has control over temperature sensors in a chemical or nuclear plant, could cause serious damage.

Digital fingerprint

There is a different way: namely by deducing the security key from a unique physical characteristic of the memory chip (Static Random-Access Memory, or SRAM) that can be found in practically every IoT device. Depending on the random circumstances during the chip's manufacturing process, the memory locations have a random default value of 0 or 1.

"That binary code which you can read out when activating the chip, constitutes a kind of digital fingerprint of the device," says Kusters, who gained her doctorate at the Information and Communication Theory Laboratory at the TU/e department of Electrical Engineering. This fingerprint is known as a Physical Unclonable Function (PUF). "The Eindhoven-based company Intrinsic ID sells digital security based on SRAM-PUFs. I collaborated with them for my doctoral research, during which I focused on how to generate, in a reliable way, a key from that digital fingerprint that is as long as possible. The longer, the safer."

The major advantage of security keys based on SRAM-PUFs is that the key exists only at the moment when authentication is required. "The device restarts itself to read out the SRAM-PUF and in doing so creates the key, which subsequently gets erased immediately after use. That makes it all but impossible for an attacker to steal the key."

Noise and reliability

But that's not the entire story, because some bits of the SRAM do not always have the same value during activation, Kusters explains. Ten to fifteen percent of the bits turn out not to be determined, which makes the digital fingerprint a bit fuzzy. How do you use that fuzzy fingerprint to make a key of the highest possible complexity that nevertheless still fits into the receiving lock—practically—each time?
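One textbook way to get a stable key out of a fuzzy fingerprint is to store some helper data when the device is first enrolled and to correct errors with a repetition code at every later readout. The sketch below illustrates that general idea only; it is not the scheme analyzed in the thesis or the one used by Intrinsic ID, and the function names, the repetition factor of 15 and the 12% bit-flip rate are assumptions made for the example.

```python
import random


def enroll(response, key_bits, repeat):
    """Return helper data: the repetition-coded key XORed with the PUF response."""
    codeword = [bit for bit in key_bits for _ in range(repeat)]
    return [c ^ r for c, r in zip(codeword, response)]


def reconstruct(noisy_response, helper, repeat):
    """Recover the key: undo the XOR, then majority-vote each block of `repeat` bits."""
    noisy_codeword = [h ^ r for h, r in zip(helper, noisy_response)]
    blocks = [noisy_codeword[i:i + repeat] for i in range(0, len(noisy_codeword), repeat)]
    return [1 if 2 * sum(block) > repeat else 0 for block in blocks]


rng = random.Random(1)          # fixed seed so the demo is repeatable
repeat = 15                     # illustrative repetition factor (odd, so no ties)
key = [rng.randint(0, 1) for _ in range(16)]
response = [rng.randint(0, 1) for _ in range(len(key) * repeat)]   # enrollment readout
helper = enroll(response, key, repeat)

# A later power-up: each bit flips with 12% probability (an assumed noise level).
noisy = [bit ^ (rng.random() < 0.12) for bit in response]
print("key recovered:", reconstruct(noisy, helper, repeat) == key)
```

The trade-off is visible in the parameters: a larger repetition factor tolerates more noise but spends more SRAM bits per key bit, so a fixed amount of memory yields a shorter key.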

"What you want to prevent is that the generated key won't be recognized by the receiving party as a consequence of the 'noise' in the SRAM-PUF," Kusters explains. "It's alright if that happens one in a million times perhaps, preferably less often." The probability of error is smaller with a shorter key, but such a key is also easier to guess for people with bad intentions. "I've searched for the longest reliable key, given a certain amount of noise in the measurement. It helps if you store extra information about the SRAM-PUF, but that must not be of use to a potential attacker. My thesis is an analysis of how you can reach the optimal result in different situations with that extra information."

Originally posted here.

Scott Rosenthal and I go back about a thousand years; we've worked together, helped midwife the embedded field into being, had some amazing sailing adventures, and recently took a jaunt to the Azores just for the heck of it. Our sons are both big data people; their physics PhDs were perfect entrees into that field, and both now work in the field of artificial intelligence.

At lunch recently we were talking about embedded systems and AI, and Scott posed a thought that has been rattling around in my head since. Could AI replace firmware?

Firmware is a huge problem for our industry. It's hideously expensive. Only highly-skilled people can create it, and there are too few of us.

What if an AI engine of some sort could be dumped into a microcontroller and the "software" then created by training that AI? If that were possible - and that's a big "if" - then it might be possible to achieve what was hoped for when COBOL was invented: programmers would no longer be needed as domain experts could do the work. That didn't pan out for COBOL; the industry learned that accountants couldn't code. Though the language was much more friendly than the assembly it replaced, it still required serious development skills.

But with AI, could a domain expert train an inference engine?

Consider a robot: a "home economics" major could create scenarios of stacking dishes from a dishwasher. Maybe these would be in the form of videos, which were then fed to the AI engine as it tuned the weighting coefficients to achieve what the home ec expert deems worthy goals.

My first objection to this idea was that these sorts of systems have physical constraints. With firmware I'd write code to sample limit switches so the motors would turn off if at an end-of-motion extreme. During training an AI-based system would try and drive the motors into all kinds of crazy positions, banging destructively into stops. But think how a child learns: a parent encourages experimentation but prevents the youngster from self-harm. Maybe that's the role of the future developer training an AI. Or perhaps the training will be done on a simulator of some sort where nothing can go horribly wrong.

Taking this further, a domain expert could define the desired inputs and outputs, and then a poorly-paid person could do the actual training. CEOs will love that. With that model a strange parallel emerges to computation a century ago: before the computer age "computers" were people doing simple math to create tables of logs, trig, ballistics, etc. A roomful of them labored at a problem. They weren't particularly skilled, didn't make much, but did the rote work under the direction of one master. Maybe AI trainers will be somewhat like that.

Like we outsource clothing manufacturing to Bangladesh, I could see training, basically grunt work, being sent overseas as well.

I'm not wild about this idea as it means we'd have an IoT of idiots: billions of AI-powered machines where no one really knows how they work. They've been well-trained but what happens when there's a corner case?

And most of the AI literature I read suggests that inference successes of 97% or so are the norm. That might be fine for classifying faces, but a 3% failure rate of a safety-critical system is a disaster. And the same rate for less-critical systems like factory controllers would also be completely unacceptable.
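To put a number on that worry, here is a quick back-of-the-envelope sketch. It assumes every inference is independent and succeeds with the same probability, which real systems won't strictly obey; the function name is made up for the example.

```python
def prob_at_least_one_failure(accuracy, decisions):
    """Chance that at least one of `decisions` independent inferences is wrong."""
    return 1.0 - accuracy ** decisions


for n in (1, 10, 100):
    print(n, round(prob_at_least_one_failure(0.97, n), 3))
```

Under those assumptions a single bad decision becomes close to certain after a hundred inferences, and a controller makes far more than that in a day.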

But the idea is intriguing.

Original post can be viewed here

Feel free to email me with comments.


Hardware

SoC

A System on Chip (SoC) is essentially an integrated circuit that integrates an entire computer system onto a single chip. It combines the CPU with the other components it needs to perform and execute its functions. It is in charge of using the other hardware and running your software. The main advantages of an SoC include lower latency and power savings.

It is made of various building blocks:

- Core + Caches + MMU – An SoC has a processor at its core, which defines its functions. Normally, an SoC has multiple processor cores. This is a “real” application processor, e.g. an ARM Cortex-A9, and it is the main thing to keep in mind when choosing an SoC. It may be assisted by, e.g., a SIMD co-processor like NEON.

- Internal RAM – IRAM is composed of very high-speed SRAM located alongside the CPU. It acts similarly to a CPU cache and is generally very small. It is used in the first phase of the boot sequence.

- Peripherals – These can be a simple ADC, a DSP, or a Graphics Processing Unit, connected via some bus to the Core. A low-power/real-time co-processor helps the main Core with real-time tasks or handles low-power states. Examples of such IP cores are USB, PCI-E, SGX, etc.

External RAM

An SoC uses RAM to store temporary data during and after bootstrap. It is the memory an embedded system uses during regular operation.

Non-Volatile Memory

In an embedded system or single-board computer, this is often the SD card. In other cases, it can be NAND, NOR, or SPI data flash memory. It is the source of the data the SoC reads, and it stores all the software components needed for the system to work.

External Peripherals

An SoC must have external interfaces for standard communication protocols such as USB, Ethernet, and HDMI. These also include wireless protocols such as Wi-Fi and Bluetooth.

Software


First of all, we introduce the boot chain which is the series of actions that happens when an SoC is powered up.

Boot ROM: It is a piece of code stored in the ROM which is executed by the booting core when it is powered on. This code contains instructions for configuring the SoC so that it can execute applications. The configuration performed by the Boot ROM includes initialization of the core’s registers and stack pointer, enabling of caches and line buffers, programming of interrupt service routines, and clock configuration.

Boot ROM also implements a Boot Assist Module (BAM) for downloading an application image from external memories using interfaces like Ethernet, SD/MMC, USB, CAN, UART, etc.

1st stage bootloader

The first-stage bootloader performs the following steps:

- Set up the memory segments and stack used by the bootloader code

- Reset the disk system

- Display a string “Loading OS…”

- Find the 2nd stage boot loader in the FAT directory

- Read the 2nd stage boot loader image into memory at 1000:0000

- Transfer control to the second-stage bootloader

It is copied by the Boot ROM into the SoC’s internal RAM, so it must be tiny enough to fit in that memory, usually well under 100 kB. It initializes the External RAM and the SoC’s external memory interface, as well as other peripherals that may be of interest (e.g. it disables watchdog timers). Once done, it executes the next stage. Depending on the context, this first stage may be called MLO, SPL, or something else.

2nd stage bootloader

This is the main bootloader and can be 10 times bigger than the 1st stage. It completes the initialization of the relevant peripherals.

- Copy the boot sector to a local memory area

- Find kernel image in the FAT directory

- Read kernel image in memory at 2000:0000

- Reset the disk system

- Enable the A20 line

- Set up the interrupt descriptor table at 0000:0000

- Set up the global descriptor table at 0000:0800

- Load the descriptor tables into the CPU

- Switch to protected mode

- Clear the prefetch queue

- Set up protected mode memory segments and stack for use by the kernel code

- Transfer control to the kernel code using a long jump
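The load addresses in the two lists above are written in x86 real-mode segment:offset notation, where the physical address is the segment multiplied by 16 plus the offset. A minimal sketch of that conversion follows; the helper names are made up for the example.

```python
def parse_segment_offset(text):
    """Split a 'SSSS:OOOO' string into integer segment and offset (both hexadecimal)."""
    segment, offset = text.split(":")
    return int(segment, 16), int(offset, 16)


def physical_address(segment, offset):
    """Real-mode x86 translation: shift the segment left by 4 bits and add the offset."""
    return (segment << 4) + offset


for label, address in [
    ("2nd stage boot loader", "1000:0000"),
    ("kernel image", "2000:0000"),
    ("interrupt descriptor table", "0000:0000"),
    ("global descriptor table", "0000:0800"),
]:
    print(f"{label:28s} {address} -> {physical_address(*parse_segment_offset(address)):#07x}")
```

So the second-stage loader lands at physical address 0x10000 and the kernel image at 0x20000, while both descriptor tables sit in the first few kilobytes of memory.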

Linux Kernel

The Linux kernel is the main component of a Linux OS and is the core interface between hardware and processes. It communicates between the hardware and processes, managing resources as efficiently as possible. The kernel performs the following jobs:

- Memory management: Keep track of memory, how much is used to store what, and where

- Process management: Determine which processes can use the processor, when, and for how long

- Device drivers: Act as an interpreter between the hardware and the processes

- System calls and security: Receive requests for the service from processes

To put the kernel in context, a Linux machine can be thought of as having three layers:

- The hardware: The physical machine—the base of the system, made up of memory (RAM) and the processor (CPU), as well as input/output (I/O) devices such as storage, networking, and graphics.

- The Linux kernel: The core of the OS. It is software residing in memory that tells the CPU what to do.

- User processes: These are the running programs that the kernel manages. User processes are what collectively make up user space. The kernel allows processes and servers to communicate with each other.

Init and rootfs – init is the first non-Kernel task to be run, and has PID 1. It initializes everything needed to use the system. In production embedded systems, it also starts the main application. In such systems, it is either BusyBox or a custom-crafted application.

View original post here

This complete guide is a 212-page eBook and is a must read for business leaders, product managers and engineers who want to implement, scale and optimize their business with IoT communications.

Whether you want to attempt initial entry into the IoT-sphere, or expand existing deployments, this book can help with your goals, providing deep understanding into all aspects of IoT.

Now more than ever, there are billions of edge products in the world. But without proper cloud computing, making the most of electronic devices that run on Linux or any other OS would not be possible.

And so, a question most people keep asking is which Software-as-a-Service platform is best at managing edge devices through cloud computing. Well, while edge device management may not be something new, the fact that the cloud computing space is not fully exploited means there is still a lot to do in the cloud space.

Remote product management is especially necessary in the 21st century and beyond. Because of the increasing number of devices connected to the Internet of Things (IoT), a reliable SaaS platform should help with fixing software glitches from anywhere in the world. From smart homes, stereo speakers and cars to personal computers, any product that is connected to the internet needs real-time protection from hacking threats such as unlawful access to business or personal data.

Data being the most vital asset is constantly at risk, especially if individuals using edge products do not connect to trusted, reliable, and secure edge device management platforms.

Bridges the Gap Between Complicated Software And End Users

Cloud computing is the new frontier through which SaaS platforms help manage edge devices in real time. But something even more noteworthy is the increasing amount of complicated software that now runs edge devices at home and in workplaces.

Edge device management, therefore, ensures everything runs smoothly. From fixing bugs, running debugging commands to real-time software patch deployment, cloud management of edge products bridges a gap between end-users and complicated software that is becoming the norm these days.

Even more importantly, going beyond physical firewall barriers is a major necessity in remote management of edge devices. A reliable Software-as-a-Service platform, therefore, ensures that data encryption for edge devices is not only hackproof but also that the data is accessed only by the right people. Moreover, deployment of secure routers and access tools is especially critical in cloud computing when managing edge devices. And so, developers behind successful SaaS platforms conduct regular security checks over the cloud, and design and implement solutions for edge products.

Reliable IT Infrastructure Is Necessary

Software-as-a-service platforms that manage edge devices focus on having a reliable IT infrastructure and centralized systems through which they can conduct cloud computing. It is all about remotely managing edge devices with the help of an IT infrastructure that eliminates challenges such as connectivity latency.

Originally posted here

Introducing Profiler, by Auptimizer: Select the best AI model for your target device — no deployment required.

Profiler is a simulator for profiling the performance of Machine Learning (ML) model scripts. Profiler can be used during both the training and inference stages of the development pipeline. It is particularly useful for evaluating script performance and resource requirements for models and scripts being deployed to edge devices. Profiler is part of Auptimizer. You can get Profiler from the Auptimizer GitHub page or via pip install auptimizer.

Why we built Profiler

The cost of training machine learning models in the cloud has dropped dramatically over the past few years. While this drop has pushed model development to the cloud, there are still important reasons for training, adapting, and deploying models to devices. Performance and security are the big two but cost-savings is also an important consideration as the cost of transferring and storing data, and building models for millions of devices tends to add up. Unsurprisingly, machine learning for edge devices or Edge AI as it is more commonly known continues to become mainstream even as cloud compute becomes cheaper.

Developing models for the edge opens up interesting problems for practitioners.

- Model selection now involves taking into consideration the resource requirements of these models.

- The training-testing cycle becomes longer due to having a device in the loop because the model now needs to be deployed on the device to test its performance. This problem is only magnified when there are multiple target devices.

Currently, there are three ways to shorten the model selection/deployment cycle:

- The use of device-specific simulators that run on the development machine and preclude the need for deployment to the device. Caveat: Simulators are usually not generalizable across devices.

- The use of profilers that are native to the target device. Caveat: They need the model to be deployed to the target device for measurement.

- The use of measures like FLOPS or Multiply-Add (MAC) operations to give approximate measures of resource usage. Caveat: The model itself is only one (sometimes insignificant) part of the entire pipeline (which also includes data loading, augmentation, feature engineering, etc.)

In practice, if you want to pick a model that will run efficiently on your target devices but do not have access to a dedicated simulator, you have to test each model by deploying on all of the target devices.

Profiler helps alleviate these issues. Profiler allows you to simulate, on your development machine, how your training or inference script will perform on a target device. With Profiler, you can understand CPU- and memory-usage as well as run-time for your model script on the target device.

How Profiler works

Profiler encapsulates the model script, its requirements, and corresponding data into a Docker container. It uses user-inputs on compute-, memory-, and framework-constraints to build a corresponding Docker image so the script can run independently and without external dependencies. This image can then easily be scaled and ported to ease future development and deployment. As the model script is executed within the container, Profiler tracks and records various resource utilization statistics including Average CPU Utilization, Memory Usage, Network I/O, and Block I/O. The logger also supports setting the Sample Time to control how frequently Profiler samples utilization statistics from the Docker container.
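To make the sampling idea concrete, here is a minimal sketch of how utilization statistics could be read from a running container with the standard `docker stats` command. This is not Profiler's actual implementation. The function names (`parse_stats_line`, `sample_once`, `average_cpu`) and the `;` separator are assumptions chosen for illustration. Only the `docker stats` flags and format placeholders are real.

```python
import subprocess
from statistics import mean

# Placeholders supported by `docker stats --format`; the ";" separator is our choice.
STATS_FORMAT = "{{.CPUPerc}};{{.MemUsage}};{{.NetIO}};{{.BlockIO}}"


def parse_stats_line(line):
    """Split one formatted `docker stats` line into a small dict."""
    cpu, mem, net_io, block_io = line.strip().split(";")
    return {
        "cpu_percent": float(cpu.rstrip("%")),
        "mem_usage": mem.split("/")[0].strip(),  # used memory, e.g. "12.5MiB"
        "net_io": net_io,
        "block_io": block_io,
    }


def sample_once(container_name):
    """Take a single utilization sample from a running container (needs Docker)."""
    result = subprocess.run(
        ["docker", "stats", "--no-stream", "--format", STATS_FORMAT, container_name],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_stats_line(result.stdout.splitlines()[0])


def average_cpu(samples):
    """Average CPU utilization (percent) over a list of parsed samples."""
    return mean(s["cpu_percent"] for s in samples)
```

Calling `sample_once` in a loop with a `time.sleep(sample_time)` between calls would mimic the Sample Time setting described above, and `average_cpu` then reduces the collected samples to a single figure.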


How Profiler helps

Our results show that Profiler can help users build a good estimate of model runtime and memory usage for many popular image/video recognition models. We conducted over 300 experiments across a variety of models (InceptionV3, SqueezeNet, Resnet18, MobileNetV2–0.25x, -0.5x, -0.75x, -1.0x, 3D-SqueezeNet, 3D-ShuffleNetV2–0.25x, -0.5x, -1.0x, -1.5x, -2.0x, 3D-MobileNetV2–0.25x, -0.5x, -0.75x, -1.0x, -2.0x) on three different devices — LG G6 and Samsung S8 phones, and NVIDIA Jetson Nano. You can find the full set of experimental results and more information on how to conduct similar experiments on your devices here.

The addition of Profiler brings Auptimizer closer to the vision of a tool that helps machine learning scientists and engineers build models for edge devices. The hyperparameter optimization (HPO) capabilities of Auptimizer help speed up model discovery. Profiler helps with choosing the right model for deployment. It is particularly useful in the following two scenarios:

- Deciding between models: The ranking of the run-times and memory usages of the model scripts measured using Profiler on the development machine is indicative of their ranking on the target device. For instance, if Model1 is faster than Model2 when measured using Profiler on the development machine, Model1 will be faster than Model2 on the device. This ranking is valid only when the CPUs are running at full utilization.

- Predicting model script performance on the device: A simple linear relationship relates the run-times and memory usage measured using Profiler on the development machine with the usage measured using a native profiling tool on the target device. In other words, if a model runs in time x when measured using Profiler, it will run approximately in time (a*x+b) on the target device (where a and b can be discovered by profiling a few models on the device with a native profiling tool). The strength of this relationship depends on the architectural similarity between the models but, in general, the models designed for the same task are architecturally similar as they are composed of the same set of layers. This makes Profiler a useful tool for selecting the best suited model.
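The two scenarios above can be sketched in a few lines. The snippet below assumes ordinary least squares as the way to find a and b (the post does not say which fitting method was used), and the helper names are hypothetical. Any numbers passed in would come from your own measurements.

```python
def fit_line(profiler_times, device_times):
    """Least-squares fit of device_time ~ a * profiler_time + b."""
    n = len(profiler_times)
    mean_x = sum(profiler_times) / n
    mean_y = sum(device_times) / n
    sxx = sum((x - mean_x) ** 2 for x in profiler_times)
    sxy = sum(
        (x - mean_x) * (y - mean_y)
        for x, y in zip(profiler_times, device_times)
    )
    a = sxy / sxx
    b = mean_y - a * mean_x
    return a, b


def predict_on_device(profiler_time, a, b):
    """Estimate on-device run-time from a Profiler measurement."""
    return a * profiler_time + b


def same_ranking(profiler_times, device_times):
    """True if models sort in the same order on both machines."""
    def order(values):
        return sorted(range(len(values)), key=values.__getitem__)

    return order(profiler_times) == order(device_times)
```

In practice you would profile a few models both ways, call `fit_line` once, and then use `predict_on_device` for every further candidate model that has only been measured with Profiler.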

Looking forward

Profiler continues to evolve. So far, we have tested its efficacy on select mobile- and edge-platforms for running popular image and video recognition models for inference, but there is much more to explore. Profiler might have limitations for certain models or devices and can potentially result in inconsistencies between Profiler outputs and on-device measurements. Our experiment page provides more information on how to best set up your experiment using Profiler and how to interpret potential inconsistencies in results. The exact use case varies from user to user but we believe that Profiler is relevant to anyone deploying models on devices. We hope that Profiler’s estimation capability can enable leaner and faster model development for resource-constrained devices. We’d love to hear (via GitHub) if you use Profiler during deployment.

Originally posted here

Authors: Samarth Tripathi, Junyao Guo, Vera Serdiukova, Unmesh Kurup, and Mohak Shah — Advanced AI, LG Electronics USA

Why the Nvidia Jetson Nano is responsible for the biggest industrial IoT revolution these days

It feels like yesterday when the Raspberry Pi Foundation released its first Single Board Computer (SBC) to the market. Back in 2012, Raspberry Pi wasn't alone in the growing SBC market; however, it was the first to make a community-based product that brought the hardware and software ecosystem into harmony on the internet. Before those days, embedded Linux-based SBCs and SOMs were a place for Linux kernel and embedded hardware experts, with no easy-to-use tools, no ready-made Linux distros, and, most importantly, without the enormous number of questions and answers across the internet on anything related.

Today, 8 years later, the "2012 revolution" happens again

This time, it took a year to understand the impact of the new 'kid' in the market, but now there are a few indications that definitely pave the way to a revolution.

The Raspberry Pi was the first to make embedded Linux easy while keeping the advantages of reliability and flexibility in fitting different kinds of industry applications. It's almost impossible to ignore the variety of industries where the Raspberry Pi sits at the heart of products to save time-to-market and costs. The power of this magical board lies on the software side: the Raspberry Pi Foundation and its community worked hard across the years to improve and share their knowledge, and at the same time, without noticing or intending it, they brought Pi development to an extremely "serverless" level.

The Nvidia Jetson Nano

Let's stop talking about the Raspberry Pi and focus on today's industry needs to better understand why the new kid in town is here to change the market of IoT and smart products forever.

The market is moving forward. AI, robotics, great-looking on-screen app GUIs, image processing, and long data calculations have all become the new standard of smart edge products.

A few years ago, it was enough to connect your product to the cloud and receive something valuable from it. Today, product managers and developers compete in a much tougher industry era. This time, the Raspberry Pi can't be the technology hero again: its resources are limited, and the ecosystem is starting to look toward a better-fit solution.

NVIDIA Jetson devices in Upswift.io device management platform

The Jetson Nano is the first SBC to understand the combination needed to drive new products to use it. It's the first SBC designed with powerful industrial use cases in mind, while not forgetting the prototyping stage and the harmony that gave the Raspberry Pi its success. It's the first solution to bring the whole package for developers and for hardware engineers with a "SaaS" feel: the OS is already in place thanks to Ubuntu, and there are plenty of software instructions by Nvidia and open-source, ready-to-use tools custom-made for the Jetson family. Hardware engineers, for their part, are free to go with the System On Module (SOM), which connects to a carrier board that includes all the necessary inputs and outputs to make the development stage even faster.

The Jetson Nano combination basically provides the first infrastructure for producing a "2020" product with complex software while working within a minimal budget and time-to-market. The Jetson Nano enables developers and product managers to imagine further without compromises, bringing tough software missions to the edge easily.

Originally posted here

by Dan Carroll, Carnegie Mellon University, Department of Civil and Environmental Engineering

Most states in America require passenger vehicles to undergo periodic emissions inspections to preserve air quality by ensuring that a vehicle's exhaust emissions do not exceed standards set at the time the vehicle was manufactured. What some may not know is that the metrics through which emissions are gauged nowadays are usually measured by the car itself through on-board diagnostics (OBD) systems that process all of the vehicle's data. Effectively, these emissions tests are checking whether a vehicle's "check engine light" is on. While over-emitting identified by this system is 87 percent likely to be true, it also has a 50 percent false pass rate of over-emitters when compared to tailpipe testing of actual emissions.

With cars as smart devices increasingly becoming integrated into the Internet of Things (IoT), there's no longer any reason for state and county administrations to force drivers to come in for regular inspection and maintenance (I/M) checkups when all the necessary data is stored on their vehicle's OBD. In an attempt to eliminate these unnecessary costs and improve the effectiveness of I/M programs, Acharya, Matthews, and Fischbeck published their recent study in IEEE Transactions on Intelligent Transportation Systems.

Their new method entails sending data directly from the vehicle to a cloud server managed by the state or county within which the driver lives, eliminating the need for them to come in for regular inspections. Instead, the data would be run through machine learning algorithms that identify trends in the data and codes prevalent among over-emitting vehicles. This means that most drivers would never need to report to an inspection site unless their vehicle's data indicates that it's likely over-emitting, at which point they could be contacted to come in for further inspection and maintenance.

Not only has the team's work shown that a significant amount of time and cost could be saved through smarter emissions inspecting programs, but their study has also shown how these methods are more effective. Their model for identifying vehicles likely to be over-emitting was 24 percent more accurate than current OBD systems. This makes it cheaper, less demanding, and more efficient at reducing vehicle emissions.

This study could have major implications for leaders and residents within the 31 states and countless counties across the U.S. where I/M programs are currently in place. As these initiatives face criticism from proponents of both environmental deregulation and fiscal austerity, this team has presented a novel system that promises both significant reductions to cost and demonstrably improved effectiveness in reducing vehicle emissions. Their study may well redefine the testing paradigm for how vehicle emissions are regulated and reduced in America.

Summary: Know How Businesses Are Leveraging Their Business Power with the Help of the Internet of Things (IoT). They Are Paying Attention to It to Enhance Their Business Processes and Ensure Long-Term Success in This Fiercely Competitive Market.

In this IT era, the latest technology is making its way into our day-to-day life. It has influenced our lives to a great extent and has also affected the way we work. We now use different gadgets and modern equipment that ease our work and help us complete it more smoothly and accurately than ever before. Technologies like Machine Learning, Big Data Analytics, and Artificial Intelligence have slowly established their command across different industries. Apart from these, one technology that has gained significant importance is the Internet of Things (IoT), which has affected many areas of various sectors to a great extent.

The use of IoT enabled devices has enhanced the way people live their lives. According to Gartner's prediction, more than 25 billion IoT devices will be present in the market by 2021. The use of IoT will introduce new innovation for businesses, customers, and society.

The growth in usage of IoT has resulted in improvements in various sectors like healthcare, education, entertainment, and many more. Tracking assets in real time, monitoring the ups and downs in the human body, home automation, environmental monitoring, and more have all become easy, thanks to the Internet of Things (IoT).

Internet of Things: Know Why Businesses Need It for Their Business?

As per the report by Cisco, more than 500 billion devices will be connected with the Internet by 2030. Each device that will be connected by the internet will include sensors that collect data by interacting with the environment and will communicate over a network very accurately.

And all this will become possible through the Internet of Things (IoT), as it is the network of all these connected devices. The smart devices developed using this technology will generate data that IoT applications use to accomplish various tasks, such as aggregating and analyzing data and delivering insight, which helps them respond much more accurately to users' actions.

The Internet of Things is a technology that is continuously improving with each passing second. As it connects multiple things with each other, it becomes possible for businesses to get real-time access to all the information on the network, which has proved beneficial for improving their business processes. It provides multiple benefits to the businesses that adopt it. Go through the list below to explore the advantages of implementing the Internet of Things in your business.

1. Offers a Large Amount of Data

Almost all businesses these days have realized the power of the internet of things and have started opting for the same for their business. As more and more businesses are stepping ahead to opt for this technology it is predicted that the total market value of IoT will grow rapidly and will reach $3 trillion by 2026.

IoT-enabled devices are able to collect huge amounts of data from the network with the help of added sensors. This information can be beneficial for businesses, as they can easily learn what their customers really want from them, how they can fulfill their demands in the best possible way, and much more.

2. Better Customer Service

Every business these days boils down to satisfying its customers and offering them the best on demand. The combination of IoT-based devices with an app like Spotify can provide quick access to customers' behaviors. It helps businesses analyze data that includes customers' preferences, the time they spend on making a particular purchase, the language they prefer, and much more.

All this information can help businesses to enhance their customer support and come up with an advanced solution that satisfies all their needs. Using this information you can diversify your business according to new market trends and grab all the opportunities that come your way.

3. Ability to Monitor and Track Things

IoT-enabled devices will allow businesses to track and monitor each and every activity of their employees. They can easily know what their employees are working on, how many tasks they have completed, what progress they have made, and much more. They can even share information with their employees in real time about the current project they are working on and can also get information from them whenever they want.

4. Save Money and Resource

There is no doubt that machine-to-machine (M2M) communication has grown dramatically in recent years. It is estimated that the total number of M2M connections will grow from 5 billion in 2014 to 27 billion in 2024.

Machines have taken the place of humans in much of the business sector, which saves businesses a huge amount of the money and resources they used to spend on human labor. Nowadays, work like answering customers' queries, managing accounts, and keeping other business records is performed by applications and software developed using the latest technology, such as the Internet of Things.

5. Automation

IoT helps businesses find the best way to make their business processes faster and better. It can let them know which areas can be automated so that they can reduce the workload of employees and save a huge amount of time and resources. If, as an entrepreneur, you feel that your business needs to be automated, IoT can analyze each and every area of your business and let you know which ones can be automated and don't need human interaction.

6. Helps to offer Personalized Experiences

As stated above, businesses can get all the information related to their ideal customers with the help of IoT enabled devices. They can know their purchase preferences, likes, dislikes, and much more and can try to provide a personalized experience.

As per New Epsilon research, 80% of consumers like to make a purchase from a particular brand if and only if they offer personalized experiences to them. For example, businesses can develop accurate bills keeping in mind the analyzed IoT data and can provide various discounts and offers to the customers as more than 74% of customers expect that they will get automatic crediting for coupons and loyalty points.

Wonders of the Internet of Things Have a Long Way to Go!

There are certain areas that are still untapped by businesses, as they are unable to implement IoT technology in every aspect of their business environment. Some businesses have not yet opted for this modern technology at all, and as a result they are missing various opportunities that could contribute to their success. There are various ways in which IoT works wonders for every business sector. As technology is evolving continually due to the research and efforts of brilliant minds, there is a good chance that IoT will have much more to offer businesses in the near future.

When businesses implement the Internet of Things, they will see improvements in their employees' productivity, speed, and efficiency, which will directly affect business profit. Hence, work on your business niche and find out whether you can implement IoT in your business environment or not. It’s the demand of the time to stand out from others, and you can do it using IoT. Implement this technology in a basic way for your business if possible.

In the era of digitalization, IoT is fostering the upcoming revolution in mobile apps. The ways companies used to provide mobile app development are changing because of IoT. After helping thousands of corporates to deliver extraordinary user experiences, IoT is all set with some new and advanced mobile app development trends.

The tech world is the one that is continuously evolving. Every year and each day, innovations come to light. Each of them is revolutionizing our lives in one or the other ways. From the first wheel to smart cities, humans have come a long way.

The evolution and foundation of smart cities is the result of IoT or the Internet of Things. IoT has definitely stirred quite an uproar in the digital world with the mass potential it has. It can bring everything and everyone online.

As per the latest mobile app stats, IoT will become a more significant player in the mobile app development industry. The IoT market is expected to more than double by 2021, reaching a staggering 520 billion USD, up from 235 billion USD four years earlier in 2017.

Soon the IoT mobile app development will face new trends in the coming year and beyond.

Let us take a look at the top IoT mobile app development trends.

IoT App Trend #1: Cybersecurity for IoT

With an increase in the number of devices online, cybersecurity is the top priority for all businesses as IoT gains popularity. The network is expected to expand in the coming years, and so the data volume will also increase. All this draws attention to more information to protect.

IoT security will see an exponential rise as more users will store their data over the cloud. From banking details to home security, everything is easily breached if the security firewall is weak in IoT applications.

Therefore, mobile app development companies need to work on upgrading the security of their IoT-enabled mobile apps.

IoT App Trend #2: Roaring Popularity of Smart Home Devices

When smart home devices were launched, many mocked them by calling them unrealistic toys for lazy youngsters. Now, the same people are finding it increasingly difficult to resist the charm of IoT devices.

IoT devices are expected to be very popular in 2021 and the years to come. The reason behind their growing popularity is that the IoT devices are becoming highly intuitive and innovative. They are extended not only to the comfort of home automation but also to home security and the safety of your family.

Another great advantage of adopting smart IoT devices is energy savings. Intelligent lights and intelligent thermostats help conserve energy and reduce bills. These reasons will lead more and more people to adopt smart home devices.

IoT App Trend #3: Backed by AI and ML

Artificial Intelligence and Machine Learning both are thriving technologies. Both of these are the facilitators of automation. We all know how Artificial Intelligence has touched millions of lives around the globe.

Together with IoT, AI and ML are unique data-driven technologies shaping the future of human-machine interactions. The developers set up a combination of IoT and Artificial Intelligence that helps automate the routine tasks, simplifies work, and gets the most accurate information.

IoT App Trend #4: IoT and Healthcare

With the revolution in the health-tech industry, healthcare companies are turning towards mobile platforms. IoT-enabled apps open up new opportunities to improve the medical sector.

IoT already has immense applications running in the healthcare field, and its use there is expected to increase by 26.2%.

Healthcare apps featuring IoT technology are expected to reform the world of medical sciences. These IoT mobile apps can even help doctors and medical professionals treat their patients even from a distance.

Smart wearables and implants will be able to record diverse parameters to keep the patient’s health in check. By integrating sensors, portable devices, and all kinds of medical equipment, real-time updates of a patient’s health can be recorded and sent to the concerned person.

IoT App Trend #5: Edge Computing to Overtake Cloud Computing

This is a change where we have to be careful. For many years, IoT devices have been storing their data in cloud storage. However, IoT developers, development services, and manufacturers have started thinking about the utility of storing, calculating, and analyzing data at the edge.

So basically this means, in place of sending the entire data from IoT devices to the cloud, the data is first transmitted to a local or nearer storage device located close to the IoT device or on the edge of the network.

This local storage device then analyzes, sorts, filters, and calculates the data and then sends all or only a part of the data to the cloud, reducing the traffic on the network and avoiding any bottleneck situation.
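As a rough illustration of this filter-then-forward step, the sketch below reduces a window of raw sensor readings to a compact summary plus any out-of-range values, which is the only part that would be sent on to the cloud. The function name, the field names, and the `low`/`high` bounds are assumptions made for this example, not part of any particular IoT platform.

```python
from statistics import mean


def summarize_window(readings, low, high):
    """Reduce a window of raw readings to a summary plus out-of-range values."""
    anomalies = [r for r in readings if r < low or r > high]
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
        "anomalies": anomalies,  # raw values worth forwarding unchanged
    }
```

Instead of uploading every reading in the window, the local device uploads one small dictionary, which is where the reduction in network traffic comes from.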

Known as “edge computing”, this approach has several advantages if used correctly. First, it helps in the better management of the large amount of data that each device sends. Second, the reduced dependency on cloud storage allows devices and applications to perform faster and also reduces latency.

Being able to collect and process data locally, the IoT application is expected to consume less bandwidth and work even when connectivity to the cloud is affected. Given these positive aspects, edge computing can look forward to better innovation and broad adoption in IoT, both consumer and industrial.

Reduced connectivity to the cloud will also result in lower security costs and facilitate better security practices. 2021 will see better edge computing in IoT.

IoT App Trend #6: Are You Excited About Smart Cities?

Well, all of us are super excited to witness smart cities. Smart cities are one of the significant accomplishments of IoT and modernization. Integrated with IoT-powered devices, smart cities promise improved efficiency and security for the common folk on the streets and inside their homes.

With superfast data transfer supported by 5G, public transportation will also see a massive change in the way they work.

By now, we know that IoT will focus on developing smart parking lots, street lights, and traffic controls. To add up to this, with IoT and fast internet, we will live inside a world where our refrigerators will be aware of what food we have inside.

IoT will impact traffic congestion and security. It will also help in the development of sustainable cities leading us to a green future.

IoT App Trend #7: Blockchain for IoT Security

Many financial and governmental institutions, entrepreneurs, consumers, and industrialists will become decentralized, self-governing, and smart. Many new companies are building on IOTA's Tangle to develop modules and other components for firms without the cost of SaaS and cloud services.

IOTA is a distributed ledger especially designed to record and execute transactions between devices in the IoT ecosystem.

If you are in this industry, you should prepare to see centralized, monolithic computing models broken up into jobs and microservices, which will be distributed across decentralized machines and devices.

In the coming future, IoT will penetrate the disciplines of health, government, transactions, and others that we cannot think of right now. Such types of IoT technology trends will create significant effective differences.

IoT App Trend #8: IoT for Retail Apps

The eCommerce industry will also benefit from IoT integration. The retail supply chain will be more efficient after the incorporation of IoT mobile apps. It is expected to improve the online shopping experience for individuals across the globe.

Also, IoT will make the retail experience more personalized for each customer with in-app advertisements based on the user’s shopping history. We already get notifications once we purchase a product from a particular eStore. With IoT enabled mobile apps, the app will guide us to our favorite store using in-site maps.

IoT App Trend #9: Will IoT Boost Predictive Maintenance?

Yes, it will. In 2021 and beyond, the smart home system will notify the owner about plumbing leaks, appliance failures, or any other problem so that the house owner can avoid any disaster. Soon these intelligent sensors will enter our houses.

In response to these predictive capabilities of IoT, we can expect to see home care offered as a contractor service. If there is a need for any emergency action, your presence in the house will not be necessary.

IoT App Trend #10: Easy and Better Commuting

IoT mobile applications are expected to make commuting easier for students, the elderly, the business person, and many more. Today, due to heavy traffic, commuting is a significant issue for most of us. With major innovations in technology and integration of IoT, mobile applications will make traveling a breeze for everyone.

Here are some of the conventional ways that commuting will change:

- Smart street lights will make walking on the road safe for pedestrians

- Finding parking spaces will be a lot easier and seamless with data-driven parking apps.

- In-app navigation and public transportation will definitely make public transit more reliable

- IoT powered mobile apps will also improve routing between different modes of transfer.

With so many innovative ideas and benefits for iOS and android based IoT mobile apps, the mobile app development market will see an influx of transportation apps in the years to come.

IoT App Trend #11: Software-as-a-Service Becomes the Norm

While talking about IoT trends, SaaS, or Software-as-a-Service, is considered one of the hot topics for the market. Because of its low cost of entry, SaaS is quickly becoming a favorite in the IT gaming sector.

Among these emerging IoT technology trends, Software-as-a-Service will make people's lives better than ever.

IoT App Trend #12: Energy and Resource Management

Do you know what affects energy management the most? Energy management depends largely on gaining a better understanding of how resources are consumed. Electronics controlled through IoT mobile apps are expected to play a significant role in energy conservation.

All of these IoT trends can be integrated into resource management, making lives easier, more comfortable, and more responsible.

Automatic notifications can also be added to the mobile app to alert the owner when power consumption exceeds a set threshold. Various other features can be added to these IoT mobile apps as well, such as sprinkler control and in-house temperature management.
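As a rough illustration of the threshold notification idea, here is a minimal Python sketch. The `PowerMonitor` class, the 1500 W threshold, and the sample readings are all hypothetical; a real app would read from an actual meter and push the message to a phone rather than append it to a list.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class PowerMonitor:
    """Hypothetical monitor that notifies once per threshold crossing."""
    threshold_watts: float
    notify: Callable[[str], None]
    _alerted: bool = False

    def report(self, watts: float) -> None:
        if watts > self.threshold_watts and not self._alerted:
            # First reading above the threshold: send one notification.
            self.notify(
                f"Power draw {watts:.0f} W exceeds "
                f"threshold {self.threshold_watts:.0f} W"
            )
            self._alerted = True
        elif watts <= self.threshold_watts:
            # Back under the threshold: re-arm the alert.
            self._alerted = False


# Illustrative readings in watts (made-up values).
messages: List[str] = []
monitor = PowerMonitor(threshold_watts=1500, notify=messages.append)
for reading in [900, 1600, 1700, 1200, 1800]:
    monitor.report(reading)
```

The monitor re-arms only after consumption drops back under the threshold, so the owner is not flooded with one notification per reading while the load stays high.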

Conclusion

We all know that IoT has great potential to bring revolutionary changes to the mobile app development industry. It is expected to open up immense possibilities for every business or individual related to this field. Directly or indirectly, IoT will drive the future of almost every industry.

These are some of the trends that will dominate the IoT app development ecosystem in the years to come. Amid all these predictions and trends, the future is promising and worth the wait.

It feels like yesterday when the Raspberry Pi Foundation released its first single-board computer (SBC) to the market. Back in 2012, the Raspberry Pi wasn't alone in the growing SBC market; however, it was the first community-based product to bring the hardware and software ecosystems into harmony on the internet. Before then, embedded Linux-based SBCs and SOMs were the domain of Linux kernel and embedded hardware experts: there were no easy-to-use tools, no ready-made Linux distros, and, most importantly, no enormous body of questions and answers across the internet on anything related.

Today, 8 years later, the "2012 revolution" is happening again

This time, it took a year to understand the impact of the new 'kid' in the market, but now there are a few indications that definitely pave the way to a revolution.

The Raspberry Pi was the first to make embedded Linux easy while keeping the reliability and flexibility needed to fit different kinds of industrial applications. It's almost impossible to ignore the variety of industries in which the Raspberry Pi sits at the heart of products to save time-to-market and costs. The power of this magical board lies on the software side: the Raspberry Pi Foundation and its community worked hard over the years to improve and share their knowledge and, at the same time, without noticing or intending it, brought Pi development to an extremely "serverless" level.

The Nvidia Jetson Nano

Let's stop talking about the Raspberry Pi and focus on today's industry needs, to better understand why the new kid in town is here to change the market for IoT and smart products forever.

Why do we need to thank Nvidia and the Jetson Nano?

The market is moving forward. AI, robotics, great-looking on-screen app GUIs, image processing, and long data calculations have all become the new standard for smart edge products.

A few years ago, you only needed to connect your product to the cloud to get something valuable; today, product managers and developers compete in a much tougher industry era. This time, the Raspberry Pi can't be the technology hero again: its resources are limited and the ecosystem is starting to look toward a better-fitting solution.

The Jetson Nano is the first SBC to understand the combination needed to drive new products to use it. It's the first SBC designed with powerful industrial use cases in mind, without forgetting the prototyping stage and the harmony that gave the Raspberry Pi its success. It's the first solution to bring the whole package to developers and hardware engineers with a "SaaS" feel. The OS is already in good shape thanks to Ubuntu, and there are plenty of software instructions from Nvidia and ready-to-use open-source tools custom-made for the Jetson family. Hardware engineers, for their part, are free to go with the System on Module (SOM), which connects to a carrier board that includes all the necessary inputs and outputs to make the development stage even faster.

The Jetson Nano combination basically provides the world's first infrastructure for producing a "2020" product with complex software while working on a minimal budget and time-to-market. The Jetson Nano enables developers and product managers to imagine further without compromises, bringing tough software missions to the edge easily.

by Tirichlabs

Embedded Linux uses the Linux kernel on an embedded device, but it is quite different from a standard Linux OS. Its use in embedded systems is motivated by the availability of device support, file systems, network connectivity, and UI support. It is a version of Linux customized for embedded systems, so it is much smaller, has a minimal feature set, and requires less processing power. The Linux kernel is modified and optimized according to the embedded system's requirements. Such an embedded Linux can only run device-specific, purpose-built applications.

A Real-Time Operating System (RTOS) with minimal code is used for applications that require minimal and fixed processing time. An RTOS is a time-sharing system based on clock interrupts that uses priority sequences to decide which process to execute. When the system generates a high-priority interrupt, the running lower-priority processes are stopped and the interrupt is served. An RTOS requires less operational memory and synchronizes processes so that they can communicate with each other, so resources are used efficiently without wasting time.

COMPARISON

Size

The major difference between Embedded Linux and an RTOS is size. An RTOS running on an AVR requires approximately 4.4 kilobytes of ROM. Embedded Linux, on the other hand, is considerably larger. The kernel can be stripped of components that are not required, but even then the footprint is generally measured in megabytes.

Embedded Linux's RAM requirement is on the order of a few megabytes. In practical applications it requires more than that, because other tasks run on top of the kernel. An RTOS has much smaller memory requirements than Linux. A very simple setup running two tasks, a scheduler, a queue for communication, and a semaphore on an 8-bit architecture would use in the vicinity of 200 bytes.

Scheduler

The scheduler in an RT-system is important to ensure that tasks complete in a fixed time. Unlike a regular scheduler for a general-purpose system, its main task is not to ensure a “fair” distribution of CPU time. A common technique is simply to let the task with the highest priority run before all tasks with lower priority. This works fine for a soft real-time system, but for hard real-time the system must provide a stronger guarantee.

RTOS scheduler

An RTOS uses a highest-priority-first scheduler, which means that the ready task with the highest priority is always the one running. This is achieved with a preemptive scheduler that decides at each tick interrupt whether the currently running task may continue executing or must be switched out for another task based on priority. Tasks with the same priority are given a “fair” share of processing time. This scheduler makes soft real-time achievable, but hard real-time is difficult to achieve because there is no deadline-based scheduling of any kind.
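The behaviour described above can be sketched as a small tick-based simulation in Python. This is only an illustrative model, not the scheduler of any particular RTOS; the `Task` fields, the convention that a larger number means higher priority, and the one-tick time slice are assumptions made for the example.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    priority: int     # assumption: larger number = higher priority
    remaining: int    # ticks of work left
    release: int = 0  # tick at which the task becomes ready


def run(tasks, max_ticks=100):
    """Return the name of the task that ran in each tick."""
    timeline = []
    ready = {}  # priority level -> deque of ready tasks
    pending = sorted(tasks, key=lambda t: t.release)
    for tick in range(max_ticks):
        # Move newly released tasks into their priority queue.
        while pending and pending[0].release <= tick:
            task = pending.pop(0)
            ready.setdefault(task.priority, deque()).append(task)
        levels = [p for p, queue in ready.items() if queue]
        if not levels:
            if not pending:
                break  # nothing left to run
            timeline.append("idle")
            continue
        # At every tick the highest non-empty priority level wins,
        # which preempts any lower-priority task that ran before.
        queue = ready[max(levels)]
        task = queue.popleft()
        task.remaining -= 1
        timeline.append(task.name)
        if task.remaining > 0:
            queue.append(task)  # round-robin among equal priorities
    return timeline


# A and B share a priority; C arrives at tick 1 with a higher one.
timeline = run([
    Task("A", priority=1, remaining=3),
    Task("B", priority=1, remaining=2),
    Task("C", priority=2, remaining=2, release=1),
])
print(timeline)
```

In this run, C takes over as soon as it is released and runs to completion, after which A and B alternate. Nothing in the model looks at deadlines, which is the limitation the paragraph above points out for hard real-time use.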

The scheduler can be either preemptive or cooperative. In preemptive mode a task can be preempted, whereas in cooperative mode it is up to every task to give up the CPU “often” enough for higher-priority tasks to get to run. A typical RTOS kernel achieves scheduler latencies from zero to a few microseconds.

Embedded Linux scheduler

In Embedded Linux, there is a wider choice of schedulers. The modularity of Embedded Linux allows different parts of the system to be changed, and a simple insmod makes it possible to swap the scheduler. There are a couple of schedulers designed for different purposes.

First of all, there is a basic highest-priority-first scheduler that uses the priority of a task and schedules the highest one first. Embedded Linux also implements Earliest Deadline First (EDF), which uses the periodic feature of Embedded Linux. Assuming that the deadline for every task is the moment it is next due to run, a fast EDF can be implemented. In theory it is optimal, since it can schedule tasks up to 100% CPU usage. In practice the result is not quite the same because of overheads. Embedded Linux runs the usual Linux kernel as its idle process, so Linux gets to run only when no rt-tasks can run. This can lead to starvation of Linux, effectively disabling it. But since the point of a real-time system is to run the real-time tasks, this is not a big problem for the system. Typical latencies in a real-time Linux scheduler are on the order of tens to hundreds of microseconds.
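To make the EDF idea concrete, here is a minimal Python sketch that simulates periodic tasks whose deadline is their next release, as assumed above. It is an idealized model with zero scheduling overhead, not the implementation used by any real-time Linux variant; `PeriodicTask`, `utilization`, and `edf_simulate` are hypothetical names, and `math.lcm` requires Python 3.9 or later.

```python
from dataclasses import dataclass
from fractions import Fraction
from math import lcm


@dataclass(frozen=True)
class PeriodicTask:
    name: str
    wcet: int    # execution time needed per period, in ticks
    period: int  # period in ticks; deadline = next release


def utilization(tasks):
    """Total CPU utilization: sum of wcet / period over all tasks."""
    return sum(Fraction(t.wcet, t.period) for t in tasks)


def edf_simulate(tasks):
    """Simulate one hyperperiod; return (timeline, missed_deadline)."""
    horizon = lcm(*(t.period for t in tasks))
    jobs = []  # each job is [deadline, name, remaining ticks]
    timeline = []
    for tick in range(horizon):
        for t in tasks:
            if tick % t.period == 0:
                jobs.append([tick + t.period, t.name, t.wcet])
        # A job still unfinished at its deadline has missed it.
        if any(deadline <= tick for deadline, _, _ in jobs):
            return timeline, True
        if not jobs:
            timeline.append("idle")
            continue
        job = min(jobs)  # earliest deadline first; ties broken by name
        job[2] -= 1
        timeline.append(job[1])
        if job[2] == 0:
            jobs.remove(job)
    return timeline, bool(jobs)


# Utilization exactly 1: schedulable in this idealized model.
full = [PeriodicTask("T1", wcet=1, period=2),
        PeriodicTask("T2", wcet=2, period=4)]
full_timeline, full_missed = edf_simulate(full)

# Utilization above 1: some deadline must be missed.
overloaded = [PeriodicTask("T1", wcet=2, period=2),
              PeriodicTask("T2", wcet=1, period=4)]
_, overloaded_missed = edf_simulate(overloaded)

print(utilization(full), full_timeline, full_missed)
print(utilization(overloaded), overloaded_missed)
```

The first task set uses exactly 100% of the CPU and still meets every deadline in the simulation, while the second, at 125%, does not. Real systems fall short of the 100% bound because of the context-switch and bookkeeping overheads mentioned above, which this sketch ignores.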

CPU resource

Embedded Linux requires significant CPU resources: perhaps a >200 MIPS 32-bit processor, ideally with an MMU, 4 MB of ROM, and 16 MB of RAM. Boot may take several seconds.

An RTOS, on the other hand, runs in less than 10 KB on microcontrollers from 8-bit up and boots in milliseconds.

IoT Implementation of OS

An RTOS is often preferred for extremely low-power applications, such as sensors that run for months on batteries. The low-power nature often precludes direct IP connectivity, so a gateway is needed for Internet connectivity. The gateway speaks the low-power protocol to the sensors and translates it to IP. Linux may already have a protocol implementation that fulfills these requirements.

The basic requirement of an IoT device is network connectivity, typically in the form of IP via a web server. An RTOS can offer IP connectivity, but the implementation risks being buggy unless you examine it carefully. For example, RTOSs usually do not isolate the user of the IP stack from the IP stack itself. Network connectivity potentially requires dealing with low-speed or congested links, which can lead to obscure and hard-to-debug buffer handling issues when the stack is intermingled with other code. Embedded Linux, on the other hand, leverages hardware separation and a widely used IP stack that has probably already been exposed to the corner cases.

Security is essential in IoT devices, which are often exposed to the open Internet. If the Internet-facing interface is compromised, intruders can steal information or hijack control of the device. Developers can leverage native embedded Linux features (multiuser support, SELinux, and containers) to contain and limit the damage.

Linux is certainly a robust and secure OS, and it has matured as an embedded operating system. Yet one of its drawbacks is its memory footprint compared to a real-time operating system. Even though it can be trimmed down by removing tools and system services that embedded systems do not need, it is still a large piece of software. It simply cannot run on 8- or 16-bit MCUs, and it requires more onboard RAM for the kernel. For example, MCUs based on the ARM Cortex-M architecture typically have only a few hundred kilobytes of RAM, and Linux cannot run on these chips.

A common engineering solution for networked systems is to use two processors in the device. In this arrangement, an 8- or 16-bit MCU is used for the sensor or actuator, while a 32-bit processor running an RTOS is used for the network interface. Sales of 32-bit MCUs have exploded in the last several years, and they have become the largest segment of the MCU market.

When I work on a development project, I like to use development boards that have Arduino headers on them. The number of shields that easily connect to these headers is phenomenal. The one problem I’ve always had, though, is that there is always a need for a breadboard to test a circuit or integrate a sensor that just isn’t in an Arduino header format. The result is a wiring mess that can lead to loose or missing connections.

I was recently talking with Max Maxfield and he pointed me to a really cool adapter board designed to remove these wiring jumpers to a breadboard. Max wrote about this board here but I’m so excited about this that I thought I’d add my two cents as well.

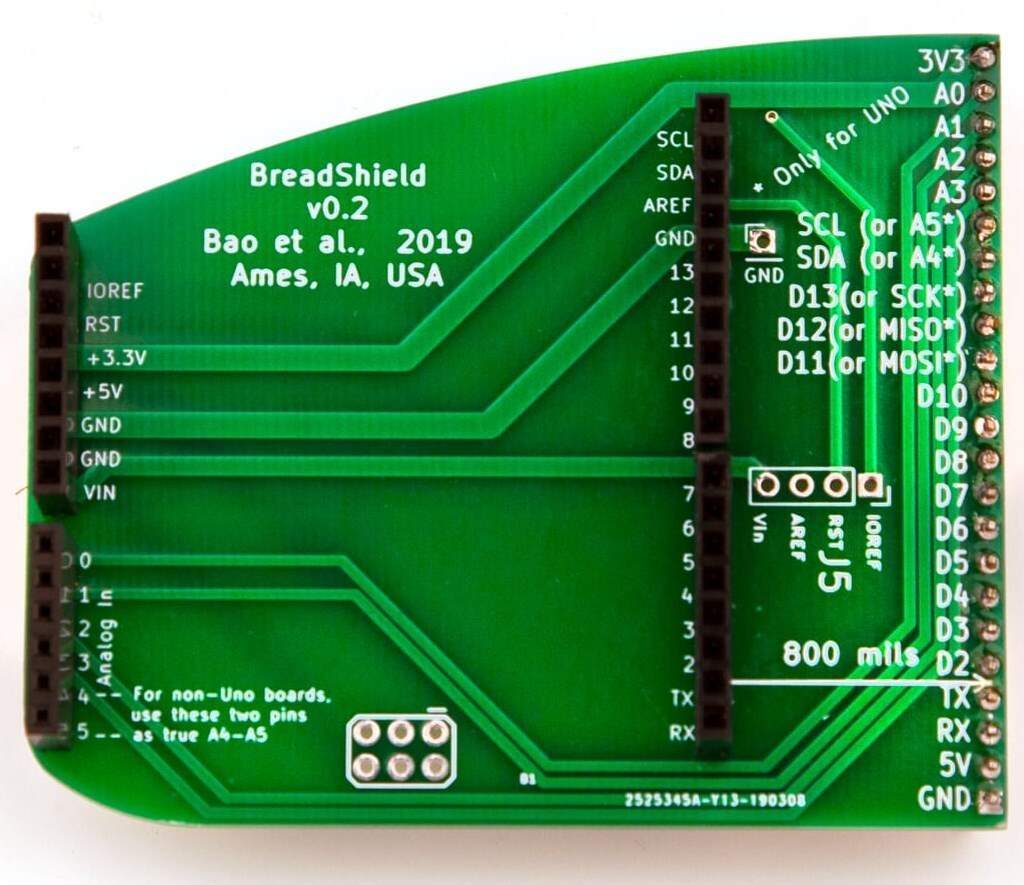

The BreadShield, which can be purchased at https://www.crowdsupply.com/loser/breadshield, adapts the Arduino headers to a linear set of header pins designed to be plugged into a breadboard. You can see in the image that this removes the extra jumpers one would normally need, which can add up to quite a few wires.

When I heard about these, I purchased three assembled units for about $28, which saves me the time of assembling the adapters myself. DIY assembly runs about $15 for a set of three boards. Either way, it's a great price to remove a bunch of wires from the workbench.

Now I’m still waiting for mine to arrive, but from the image, you can see that the one challenge in using these adapters might be matching the height of your breadboard to your hardware stack. While this could be an issue, I keep spacers of various lengths around the office so that I can adapt board heights, and undoubtedly there will be a length that ensures these line up properly.

You can view the original post here

Does anyone remember in-circuit emulators (ICEs)?