Artificial intelligence (AI) and machine learning (ML) are widely discussed, and they are inspiring many entrepreneurs to invest in products built on them. This investment creates a real opportunity for young people to learn these concepts and find full-time work in the field.

To help you land a full-time job in this field, we have collected the top concepts to learn before getting started with AI and machine learning. But first, let's look at what artificial intelligence and machine learning are.

About AI

AI stands for artificial intelligence, which is expected to take over some tasks that people do today and to perform them accurately. Two of its main concepts are machine learning and deep learning, and deep learning is itself a branch of machine learning. Both are used to predict an output based on the data available. Many big organizations have been applying these concepts successfully for years in the finance, gaming, technology, and image processing industries. In a typical machine learning product, you train a model on a large amount of data. Once the model is trained, it can make predictions on new inputs on its own. Deep learning models usually need a very large amount of training data, while other machine learning models can often work with comparatively less, and they can be just as useful.
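To make the idea of "train a model, then let it predict" concrete, here is a minimal sketch in plain Python. It fits a straight line to a handful of points with least squares, which is one of the simplest possible models and not what a production system would use. The function names `fit_line` and `predict`, and the study-hours data, are made up for illustration.

```python
def fit_line(xs, ys):
    """Train a one-variable linear model with ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Use the trained model to predict an output for a new input."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical training data: hours studied -> exam score
hours = [1.0, 2.0, 3.0, 4.0]
scores = [52.0, 54.0, 56.0, 58.0]

model = fit_line(hours, scores)   # training step
print(predict(model, 5.0))        # prediction for an input the model has not seen
```

Real ML libraries follow the same two-step pattern of fitting on known data and then predicting on new data, only with far more flexible models.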

Below are the top concepts you should learn before moving on to other AI/ML concepts.

1) Binary translation

A binary number is a base-2 number written using only the digits 0 and 1. Digital electronic systems, old and new, ultimately operate on binary values, and everything else is translated into binary before the hardware can process it. For that reason, it is important for a software engineer to understand how binary conversion works.

Along with binary, you can also learn other number systems such as hexadecimal, decimal, and octal. Of these, decimal is the easiest to learn because it is the one we use every day. Most apps and software that involve people show numbers in decimal on the front end, while the machine stores and processes them in binary on the back end.
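A short Python sketch can show how these number systems relate. It uses the built-ins `bin`, `oct`, `hex`, and `int`, plus a hand-written `to_binary` helper (a name chosen here for illustration) that converts by repeated division by 2, the method usually taught on paper.

```python
def to_binary(n):
    """Convert a non-negative integer to a binary string by repeated division by 2."""
    if n < 0:
        raise ValueError("n must be non-negative")
    if n == 0:
        return "0"
    digits = []
    while n > 0:
        digits.append(str(n % 2))  # the remainder is the next bit, lowest first
        n //= 2
    return "".join(reversed(digits))


number = 10
print(to_binary(number))   # manual conversion
print(bin(number))         # built-in binary form, prefixed with 0b
print(oct(number))         # built-in octal form, prefixed with 0o
print(hex(number))         # built-in hexadecimal form, prefixed with 0x
print(int("1010", 2))      # parse a binary string back into a decimal integer
```

Here `to_binary(10)` gives `1010`, and `int("1010", 2)` turns it back into 10, so the round trip between decimal and binary is easy to check by hand.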

2) Programming

Most machine learning and deep learning apps are written in Python. Although Python has many ready-made libraries and frameworks, you still need basic programming skills to use them in your own project. You can start with the C language and then move on to Python.

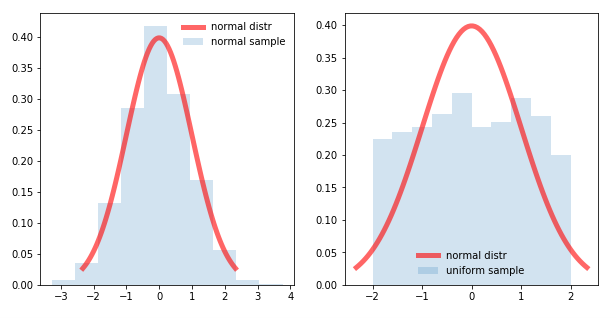

3) Probability in maths

Math is not essential for everyday programming, but it helps a great deal in machine learning and deep learning, especially probability. In deep learning you deal with a lot of data, and probability gives you tools to reason about that data, for example by estimating which results are most likely so that a search can check them first.

So, these are the top three concepts to learn before developing your first AI/ML app and pursuing a job in the field. Do share your thoughts on this subject.
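As a small illustration of probability applied to data, the sketch below estimates a probability and a conditional probability by counting. The message data and the helper names `probability` and `conditional_probability` are hypothetical, chosen only for this example.

```python
def probability(events, predicate):
    """Estimate P(predicate) as the fraction of events that satisfy it."""
    if not events:
        raise ValueError("events must not be empty")
    matches = sum(1 for e in events if predicate(e))
    return matches / len(events)


def conditional_probability(events, target, given):
    """Estimate P(target | given) by restricting to events where `given` holds."""
    given_events = [e for e in events if given(e)]
    return probability(given_events, target)


# Hypothetical data: each message is (contains_word_offer, is_spam)
messages = [
    (True, True), (True, True), (True, False), (False, False),
    (False, False), (False, True), (False, False), (True, True),
]

p_spam = probability(messages, lambda m: m[1])
p_spam_given_offer = conditional_probability(
    messages,
    target=lambda m: m[1],   # message is spam
    given=lambda m: m[0],    # message contains the word "offer"
)
print(p_spam, p_spam_given_offer)
```

On this toy data half of all messages are spam, but three out of four messages containing "offer" are spam. Spotting that kind of shift is the basic idea behind many probabilistic classifiers.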