by Stephanie Overby

What's next for edge computing, and how should it shape your strategy? Experts weigh in on edge trends and talk workloads, cloud partnerships, security, and related issues.

All year, industry analysts have been predicting that edge computing, along with complementary 5G network offerings, will see significant growth as major cloud vendors deploy more edge servers in local markets and telecom providers push ahead with 5G deployments.

The global pandemic has not significantly altered these predictions. In fact, according to IDC’s worldwide IT predictions for 2021, COVID-19’s impact on workforce and operational practices will be the dominant accelerator for 80 percent of edge-driven investments and business model change across most industries over the next few years.

First, what exactly do we mean by edge? Here’s how Rosa Guntrip, senior principal marketing manager, cloud platforms at Red Hat, defines it: “Edge computing refers to the concept of bringing computing services closer to service consumers or data sources. Fueled by emerging use cases like IoT, AR/VR, robotics, machine learning, and telco network functions that require service provisioning closer to users, edge computing helps solve the key challenges of bandwidth, latency, resiliency, and data sovereignty. It complements the hybrid computing model where centralized computing can be used for compute-intensive workloads while edge computing helps address the requirements of workloads that require processing in near real time.”

Moving data infrastructure, applications, and data resources to the edge can enable faster response to business needs, increased flexibility, greater business scaling, and more effective long-term resilience.

“Edge computing is more important than ever and is becoming a primary consideration for organizations defining new cloud-based products or services that exploit local processing, storage, and security capabilities at the edge of the network through the billions of smart objects known as edge devices,” says Craig Wright, managing director with business transformation and outsourcing advisory firm Pace Harmon.

“In 2021 this will be an increasing consideration as autonomous vehicles become more common, as new post-COVID-19 ways of working require more distributed compute and data processing power without incurring debilitating latency, and as 5G adoption stimulates a whole new generation of augmented reality, real-time application solutions, and gaming experiences on mobile devices,” Wright adds.

8 key edge computing trends in 2021

Noting the steady maturation of edge computing capabilities, Forrester analysts said, “It’s time to step up investment in edge computing,” in their recent Predictions 2020: Edge Computing report. As edge computing emerges as ever more important to business strategy and operations, here are eight trends IT leaders will want to keep an eye on in the year ahead.

1. Edge meets more AI/ML

Until recently, pre-processing of data via near-edge technologies or gateways had its share of challenges due to the increased complexity of data solutions, especially in use cases with a high volume of events or limited connectivity, explains David Williams, managing principal of advisory at digital business consultancy AHEAD. “Now, AI/ML-optimized hardware, container-packaged analytics applications, frameworks such as TensorFlow Lite and tinyML, and open standards such as the Open Neural Network Exchange (ONNX) are encouraging machine learning interoperability and making on-device machine learning and data analytics at the edge a reality.”

Machine learning at the edge will enable faster decision-making. “Moreover, the amalgamation of edge and AI will further drive real-time personalization,” predicts Mukesh Ranjan, practice director with management consultancy and research firm Everest Group.

“But without proper thresholds in place, anomalies can slowly become standards,” notes Greg Jones, CTO of IoT solutions provider Kajeet. “Advanced policy controls will enable greater confidence in the actions made as a result of the data collected and interpreted from the edge.”
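Jones's point about thresholds can be made concrete with a small sketch. The Python below is illustrative only and does not come from any of the vendors quoted: the `DriftAwareDetector` class, its parameter values, and the simulated sensor readings are all assumptions. It shows how a detector that only compares each reading to a self-adjusting baseline can absorb a slow upward drift without ever raising an alert, while a fixed policy limit that never adapts still catches it.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class DriftAwareDetector:
    """Hypothetical edge-side check: adaptive baseline plus a fixed policy limit."""
    alpha: float = 0.2           # smoothing factor for the moving baseline (assumed)
    tolerance: float = 5.0       # allowed deviation from the baseline (assumed)
    policy_limit: float = 80.0   # hard ceiling set by policy, never adapted (assumed)
    baseline: Optional[float] = None

    def check(self, reading: float) -> List[str]:
        """Return the alerts raised by one reading, then update the baseline."""
        if self.baseline is None:
            self.baseline = reading
        alerts = []
        # Adaptive check: compares against a baseline that follows the data.
        if abs(reading - self.baseline) > self.tolerance:
            alerts.append("deviation")
        # Fixed check: compares against a limit that does not move.
        if reading > self.policy_limit:
            alerts.append("policy_limit")
        # Exponential moving average: the baseline drifts toward each reading.
        self.baseline += self.alpha * (reading - self.baseline)
        return alerts


# Simulated sensor values creeping upward by 0.5 per sample, from 70.0 to 89.5.
readings = [70 + 0.5 * i for i in range(40)]
detector = DriftAwareDetector()
results = [detector.check(r) for r in readings]

deviation_alerts = sum("deviation" in a for a in results)
policy_alerts = sum("policy_limit" in a for a in results)
print(f"deviation alerts: {deviation_alerts}, policy alerts: {policy_alerts}")
```

In this run the adaptive check stays silent for the whole drift because the baseline keeps pace with the readings, which is the "anomalies become standards" failure mode. Only the fixed limit fires once readings pass 80. A real deployment would choose limits from domain knowledge rather than the placeholder numbers used here.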

2. Cloud and edge providers explore partnerships

IDC predicts that by 2024, a quarter of organizations will improve business agility by integrating edge data with applications built on cloud platforms. That will require partnerships across cloud and communications service providers, and some pairings between wireless carriers and the major public cloud providers are already under way.

According to IDC research, the systems that organizations can leverage to enable real-time analytics are already starting to expand beyond traditional data centers and deployment locations. Devices and computing platforms closer to end customers and/or co-located with real-world assets will become an increasingly critical component of this IT portfolio. This edge computing strategy will be part of a larger computing fabric that also includes public cloud services and on-premises locations.

In this scenario, edge provides immediacy and cloud supports big data computing.

3. Edge management takes center stage

“As edge computing becomes as ubiquitous as cloud computing, there will be increased demand for scalability and centralized management,” says Wright of Pace Harmon. IT leaders deploying applications at scale will need to invest in tools to “harness step change in their capabilities so that edge computing solutions and data can be custom-developed right from the processor level and deployed consistently and easily just like any other mainstream compute or storage platform,” Wright says.

The traditional approach to data center or cloud monitoring won’t work at the edge, notes Williams of AHEAD. “Because of the rather volatile nature of edge technologies, organizations should shift from monitoring the health of devices or the applications they run to instead monitor the digital experience of their users,” Williams says. “This user-centric approach to monitoring takes into consideration all of the components that can impact user or customer experience while avoiding the blind spots that often lie between infrastructure and the user.”

As Stu Miniman, director of market insights on the Red Hat cloud platforms team, recently noted, “If there is any remaining argument that hybrid or multi-cloud is a reality, the growth of edge solidifies this truth: When we think about where data and applications live, they will be in many places.”

“The discussion of edge is very different if you are talking to a telco company, one of the public cloud providers, or a typical enterprise,” Miniman adds. “When it comes to Kubernetes and the cloud-native ecosystem, there are many technology-driven solutions competing for mindshare and customer interest. While telecom giants are already extending their NFV solutions into the edge discussion, there are many options for enterprises. Edge becomes part of the overall distributed nature of hybrid environments, so users should work closely with their vendors to make sure the edge does not become an island of technology with a specialized skill set.”

4. IT and operational technology begin to converge

Resiliency is perhaps the business term of the year, thanks to a pandemic that revealed most organizations’ weaknesses in this area. IoT-enabled devices (and other connected equipment) drive the adoption of edge solutions where infrastructure and applications are being placed within operations facilities. This approach will be “critical for real-time inference using AI models and digital twins, which can detect changes in operating conditions and automate remediation,” IDC’s research says.

IDC predicts that the share of new operational processes deployed on edge infrastructure will grow from less than 20 percent today to more than 90 percent in 2024 as IT and operational technology converge. Organizations will begin to prioritize not just extracting insight from their new sources of data, but integrating that intelligence into processes and workflows using edge capabilities.

Mobile edge computing (MEC) will be a key enabler of supply chain resilience in 2021, according to Pace Harmon’s Wright. “Through MEC, the ecosystem of supply chain enablers has the ability to deploy artificial intelligence and machine learning to access near real-time insights into consumption data and predictive analytics as well as visibility into the most granular elements of highly complex demand and supply chains,” Wright says. “For organizations to compete and prosper, IT leaders will need to deliver MEC-based solutions that enable an end-to-end view across the supply chain available 24/7 – from the point of manufacture or service throughout its distribution.”

5. Edge eases connected ecosystem adoption

Edge not only enables and enhances the use of IoT, but it also makes it easier for organizations to participate in the connected ecosystem with minimized network latency and bandwidth issues, says Manali Bhaumik, lead analyst at technology research and advisory firm ISG. “Enterprises can leverage edge computing’s scalability to quickly expand to other profitable businesses without incurring huge infrastructure costs,” Bhaumik says. “Enterprises can now move into profitable and fast-streaming markets with the power of edge and easy data processing.”

6. COVID-19 drives innovation at the edge

“There’s nothing like a pandemic to take the hype out of technology effectiveness,” says Jason Mann, vice president of IoT at SAS. Take IoT technologies such as computer vision enabled by edge computing: “From social distancing to thermal imaging, safety device assurance and operational changes such as daily cleaning and sanitation activities, computer vision is an essential technology to accelerate solutions that turn raw IoT data (from video/cameras) into actionable insights,” Mann says. Retailers, for example, can use computer vision solutions to identify when people are violating the store’s social distancing policy.

7. Private 5G adoption increases

“Use cases such as factory floor automation, augmented and virtual reality within field service management, and autonomous vehicles will drive the adoption of private 5G networks,” says Ranjan of Everest Group. Expect more maturity in this area in the year ahead, Ranjan says.

8. Edge improves data security

“Data efficiency is improved at the edge compared with the cloud, reducing internet and data costs,” says ISG’s Bhaumik. “The additional layer of security at the edge enhances the user experience.” Edge computing is also not dependent on a single point of application or storage, Bhaumik says. “Rather, it distributes processes across a vast range of devices.”

As organizations adopt DevSecOps and take a “design for security” approach, edge is becoming a major consideration for the CSO to enable secure cloud-based solutions, says Pace Harmon’s Wright. “This is particularly important where cloud architectures alone may not deliver enough resiliency or inherent security to assure the continuity of services required by autonomous solutions, by virtual or augmented reality experiences, or big data transaction processing,” Wright says. “However, IT leaders should be aware of the rate of change and relative lack of maturity of edge management and monitoring systems; consequently, an edge-based security component or solution for today will likely need to be revisited in 18 to 24 months’ time.”

Originally posted here.

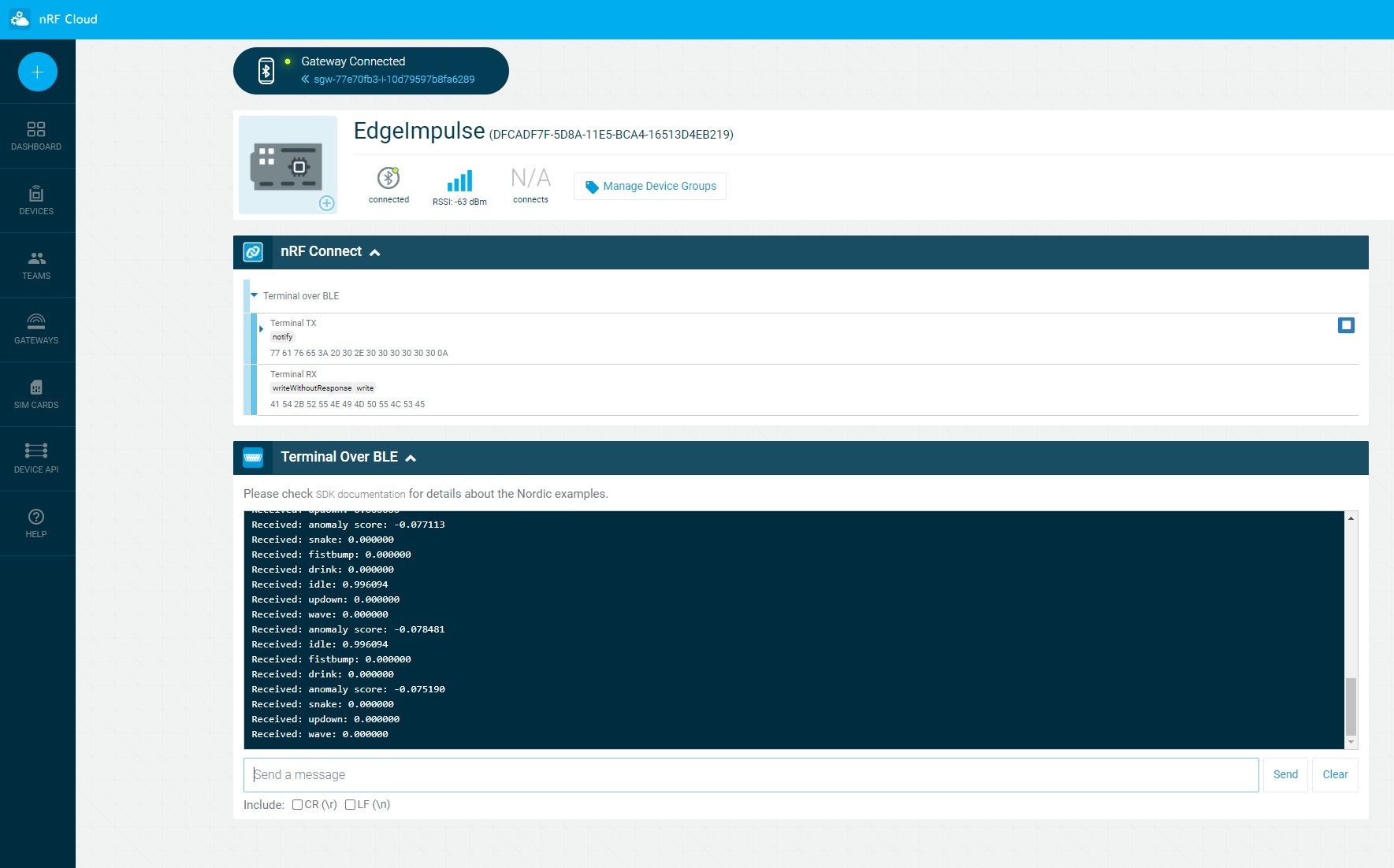

Figure 3. Classification Output Shown in nRF Cloud